- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CRAY XE6 Hardware and Architecture

From HLRS Platforms



Revision as of 14:57, 6 October 2011

Hardware of Installation step 1

- 3552 compute nodes / 113,664 cores

  - dual socket G34

    - 2x AMD Opteron(tm) 6200 Series Processor (Interlagos) with 16 cores @ 2.3 GHz

    - 32 MB L2+L3 cache per processor, of which 16 MB is L3

    - HyperTransport HT3 at 6.4 GT/s (102.4 GB/s aggregate)

    - ~150 GFLOP/s peak per socket

  - nodes with either 32 GB or 64 GB of memory

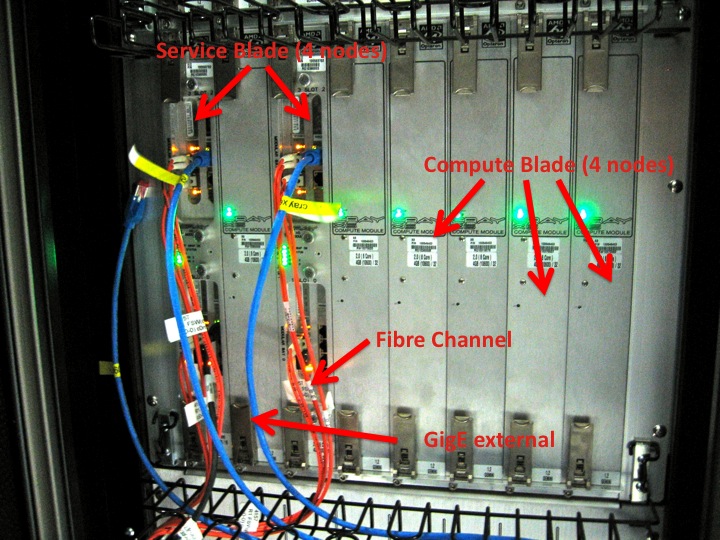
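The core count and per-socket performance above follow from simple arithmetic. The Python sketch below reproduces them. The node, socket, core and clock values are taken from the list; 4 double-precision FLOP per cycle per core (the two cores of an Interlagos module sharing two 128-bit FMA units) and four 16-bit HT3 links per socket counted in both directions are assumptions labeled in the code, not figures from this page.

```python
# Back-of-the-envelope figures for the compute partition of installation step 1.
# NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET and CLOCK_GHZ come from the hardware list;
# the FLOP/cycle and HT3 link constants are ASSUMPTIONS for illustration.

NODES = 3552
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 16
CLOCK_GHZ = 2.3

# Assumption: 4 double-precision FLOP per cycle per core
# (two cores of a module share two 128-bit FMA units).
FLOPS_PER_CYCLE_PER_CORE = 4

# Assumption: 4 HT3 links per socket, 16 bits (2 bytes) wide, both directions counted.
HT_LINKS = 4
HT_GT_PER_S = 6.4
HT_BYTES_PER_TRANSFER = 2
HT_DIRECTIONS = 2


def total_cores() -> int:
    """Cores in the whole compute partition."""
    return NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET


def peak_gflops_per_socket() -> float:
    """Theoretical double-precision peak of one socket in GFLOP/s."""
    return CORES_PER_SOCKET * CLOCK_GHZ * FLOPS_PER_CYCLE_PER_CORE


def peak_pflops_system() -> float:
    """Theoretical peak of all compute nodes in PFLOP/s."""
    return NODES * SOCKETS_PER_NODE * peak_gflops_per_socket() / 1e6


def ht_aggregate_gb_per_s() -> float:
    """Aggregate HT3 bandwidth of one socket in GB/s under the link assumptions."""
    return HT_LINKS * HT_GT_PER_S * HT_BYTES_PER_TRANSFER * HT_DIRECTIONS


if __name__ == "__main__":
    print(f"cores:           {total_cores():,}")
    print(f"peak per socket: {peak_gflops_per_socket():.1f} GFLOP/s")
    print(f"peak system:     {peak_pflops_system():.3f} PFLOP/s")
    print(f"HT3 aggregate:   {ht_aggregate_gb_per_s():.1f} GB/s")
```

Run as a script, it reports 113,664 cores, 147.2 GFLOP/s per socket (the "~150 GFLOP/s" above), about 1.046 PFLOP/s for the whole compute partition, and 102.4 GB/s of aggregate HT3 bandwidth.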

- 96 service nodes (network, MOM, router, DVS, boot, database and syslog nodes)

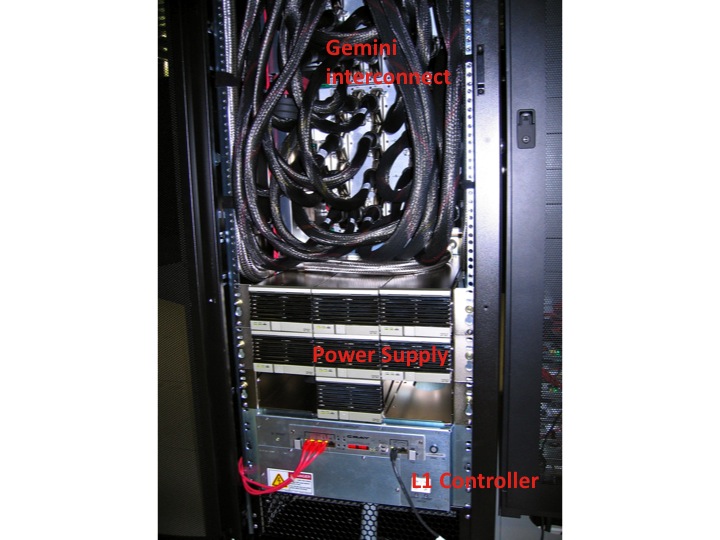

- High-speed network: Cray Gemini

- Parallel file system

  - Lustre

  - capacity 2.7 PB

  - I/O bandwidth ~150 GB/s

- infrastructure servers

  - users' HOME file system ~60 TB (BlueArc Mercury 55)

- special user nodes:

  - external login servers

  - pre-/post-processing nodes

  - remote visualization nodes
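To put the Lustre figures into perspective, the sketch below estimates how long a full-memory dump of all compute nodes would take at the quoted ~150 GB/s, and how many such dumps the 2.7 PB would hold. It is a rough lower bound under assumptions labeled in the code: every node is counted with 32 GB (the smaller configuration), units are decimal, and the peak bandwidth is sustained, which real jobs rarely achieve.

```python
# Rough I/O estimate for the Lustre file system (illustrative only).
# ASSUMPTIONS: every node counted with 32 GB, decimal units (1 PB = 1e6 GB),
# and the quoted ~150 GB/s aggregate bandwidth is sustained for the whole write.

NODES = 3552
MEM_GB_PER_NODE = 32        # smaller of the two node memory sizes
LUSTRE_GB_PER_S = 150.0     # quoted aggregate I/O bandwidth
LUSTRE_CAPACITY_PB = 2.7    # quoted capacity


def checkpoint_seconds(nodes: int, mem_gb_per_node: float, bw_gb_per_s: float) -> float:
    """Seconds needed to write nodes * mem_gb_per_node GB at bw_gb_per_s."""
    if bw_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return nodes * mem_gb_per_node / bw_gb_per_s


def checkpoints_that_fit(nodes: int, mem_gb_per_node: float, capacity_pb: float) -> int:
    """Number of complete full-memory dumps that fit into the given capacity."""
    dump_gb = nodes * mem_gb_per_node
    return int(capacity_pb * 1e6 // dump_gb)


if __name__ == "__main__":
    t = checkpoint_seconds(NODES, MEM_GB_PER_NODE, LUSTRE_GB_PER_S)
    print(f"full-memory dump: {t:.0f} s (~{t / 60:.1f} min)")
    n = checkpoints_that_fit(NODES, MEM_GB_PER_NODE, LUSTRE_CAPACITY_PB)
    print(f"dumps that fit:   {n}")
```

With these inputs it gives about 758 s (roughly 12.6 minutes) per dump and room for 23 dumps; counting the 64 GB nodes would raise the time and lower the count.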

Architecture

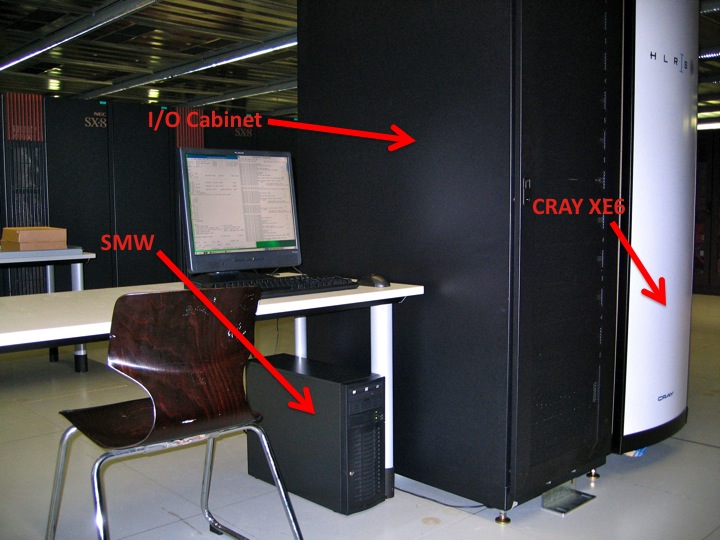

- System Management Workstation (SMW)

  - the system administrator's console for managing the Cray system: monitoring, installing and upgrading software, controlling the hardware, and starting and stopping the XE6 system.

- service nodes are classified in:

- login nodes for users to access the system

- boot nodes which provides the OS for all other nodes, licenses,...

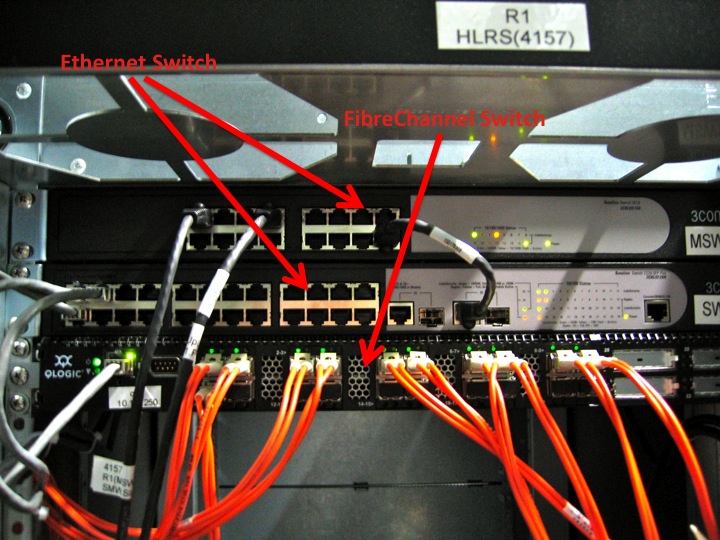

- network nodes which provides e.g. external network connections for the compute nodes

- Cray Data Virtualization Service (DVS): is an I/O forwarding service that can parallelize the I/O transactions of an underlying POSIX-compliant file system.

- sdb node for services like ALPS, torque, moab, cray management services,...

- I/O nodes for e.g. lustre

- MOM (torque) nodes for placing user jobs of the batch system in to execution

- compute nodes and pre-/post-processing nodes

  - are available to users only through the batch system and the Application Level Placement Scheduler (ALPS); see running applications.
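Because compute nodes are reached only through the batch system and ALPS, placing a job largely comes down to choosing the `aprun` options `-n` (total processing elements), `-N` (PEs per node) and `-d` (threads per PE). The hypothetical Python helper below only does that placement arithmetic for a hybrid MPI/OpenMP run, assuming all 32 cores of a node (2 sockets x 16 cores) are usable. The batch-system resource request is site-specific and deliberately left out; the running applications page is the authority on the actual syntax.

```python
import math

# Assumption: all 2 sockets x 16 cores of a compute node are available to the job.
CORES_PER_NODE = 32


def aprun_command(ranks: int, threads_per_rank: int, executable: str) -> tuple[int, str]:
    """Return (nodes needed, aprun command line) for a hybrid MPI/OpenMP job.

    Hypothetical helper: it only computes the placement; it does not submit
    anything and does not produce the batch-system resource request.
    """
    if ranks < 1 or threads_per_rank < 1:
        raise ValueError("ranks and threads_per_rank must be >= 1")
    if threads_per_rank > CORES_PER_NODE:
        raise ValueError("a rank cannot use more cores than one node has")
    # Fill each node with as many ranks as its cores allow, but not more than requested.
    ranks_per_node = min(ranks, CORES_PER_NODE // threads_per_rank)
    nodes = math.ceil(ranks / ranks_per_node)
    cmd = f"aprun -n {ranks} -N {ranks_per_node} -d {threads_per_rank} {executable}"
    return nodes, cmd


if __name__ == "__main__":
    for ranks, threads in [(64, 2), (1000, 1), (8, 4)]:
        print(aprun_command(ranks, threads, "./a.out"))
```

For example, 64 ranks with 2 threads each give 16 ranks per node on 4 nodes and the command `aprun -n 64 -N 16 -d 2 ./a.out`; `OMP_NUM_THREADS` would still have to be set to the same value as `-d`.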

Features

- Cray Linux Environment (CLE) 4.? operating system

- The operating system is based on SUSE Linux Enterprise Server (SLES) 11

- Cray Gemini interconnection network

- Cluster Compatibility Mode (CCM) functionality enables cluster-based independent software vendor (ISV) applications to run without modification on Cray systems.

- Batch system: Torque, Moab

- many development tools are available:

  - Compilers: Cray, PGI, GNU

  - Debugging: DDT, ATP, ...

  - Performance analysis: CrayPat, Cray Apprentice, PAPI

  - Libraries: BLAS, LAPACK, FFTW, PETSc, MPT, ...