- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

Vampir: Difference between revisions


Line 36:

For large-scale traces (up to many thousand MPI processes), use the parallel VampirServer (on compute nodes allocated through the queuing system), and attach to it using vampir:

{{Command|command=<nowiki>qsub -I -lnodes=16:nehalem:ppn=8,walltime=1:0:0
qsub: waiting for job 297851.intern2 to start
qsub: job 297851.intern2 ready

Line 46:

Licensed to HLRS
Running 255 analysis processes.
Server listens on: n010802:30000</nowiki>
}}

then on the head-node use the Vampir-GUI to "Remote open" the same file by attaching to the heavy-weight, compute nodes:

Revision as of 16:09, 10 January 2012

The Vampir suite of tools offers scalable event analysis through a GUI that enables fast, interactive rendering of very complex performance data. The suite consists of VampirTrace, Vampir and VampirServer. Very large data volumes can be analysed with a parallel version of VampirServer, which loads and analyses the data on the compute nodes while the Vampir GUI attaches to it.

Vampir is based on standard Qt and works on desktop Unix workstations as well as on parallel production systems. The program is available for nearly all platforms, such as Linux-based PCs and clusters, IBM, SGI, SUN, NEC, HP, and Apple.


Usage

To use Vampir, you first need to generate a trace of your application, preferably using VampirTrace. This trace consists of one file for each MPI process and an OTF (Open Trace Format) file describing the other files. Tracing can be configured through environment variables (starting with VT_).
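As a minimal sketch of such a configuration, the following script sets a few VT_ variables before an instrumented program is started. The variable names are those documented for VampirTrace 5.x as far as I am aware; the values, the trace directory and the prefix `myapp` are illustrative assumptions, so please check them against the manual of the installed version.

```shell
#!/bin/sh
# Sketch: configure VampirTrace through VT_ environment variables.
# All values and paths below are illustrative assumptions.

export VT_FILE_PREFIX="myapp"                        # base name of the trace files
export VT_BUFFER_SIZE="64M"                          # per-process trace buffer size
export VT_MAX_FLUSHES="0"                            # 0 = no limit on buffer flushes
export VT_PFORM_GDIR="${TMPDIR:-/tmp}/vt-traces"     # hypothetical directory for the final trace

# Make sure the trace directory exists before the application starts.
mkdir -p "$VT_PFORM_GDIR"

# Show what the instrumented application would inherit.
env | grep '^VT_' | sort
```

On a production system the trace directory would normally point to a fast parallel file system rather than a temporary directory.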

Please note that, being an MPI tool, VampirTrace depends not only on the compiler being used but also on the MPI version.

You may recompile, specifying the amount of instrumentation you want to include, e.g. -vt:mpi for MPI instrumentation or -vt:compinst for compiler-based function instrumentation.
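A recompile with the VampirTrace compiler wrapper might look like the sketch below. It only prints the command line, so it can be tried on a machine without VampirTrace. The wrapper name `vtcc` and the `-vt:cc` option (to select the underlying compiler) are from VampirTrace as I know it; the instrumentation flags are spelled as in the text above, and the file names are hypothetical. The exact flag spelling for the installed version can be checked with `vtcc -vt:help`.

```shell
#!/bin/sh
# Sketch: assemble a VampirTrace compile line and print it (dry run).

VT_WRAPPER="vtcc"                  # VampirTrace C compiler wrapper
VT_BACKEND="-vt:cc mpicc"          # assumption: mpicc is the underlying MPI compiler
VT_FLAGS="-vt:mpi -vt:compinst"    # instrumentation flags as named in the text
SRC="myapp.c"                      # hypothetical source file
OUT="myapp"                        # hypothetical output binary

COMPILE_CMD="$VT_WRAPPER $VT_BACKEND $VT_FLAGS -o $OUT $SRC"

# Print instead of executing; run the printed line by hand once it looks right.
echo "$COMPILE_CMD"
```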

To analyse your application, use standalone vampir for small traces (fewer than 16 processes, only a few GB of trace data).
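The rule of thumb can be written down as a small helper. This is only a sketch: the function name `choose_viewer` is made up for illustration, and the threshold of 16 processes is the rough guideline from the text, not a hard limit of the tools.

```shell
#!/bin/sh
# Sketch: pick the analysis tool from the number of traced MPI processes.

choose_viewer() {
    nprocs=$1
    if [ "$nprocs" -lt 16 ]; then
        echo "vampir"          # standalone GUI is sufficient
    else
        echo "vampirserver"    # parallel server plus GUI client
    fi
}

choose_viewer 8
choose_viewer 1024
```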

For large-scale traces (up to many thousand MPI processes), use the parallel VampirServer (on compute nodes allocated through the queuing system), and attach to it using vampir:

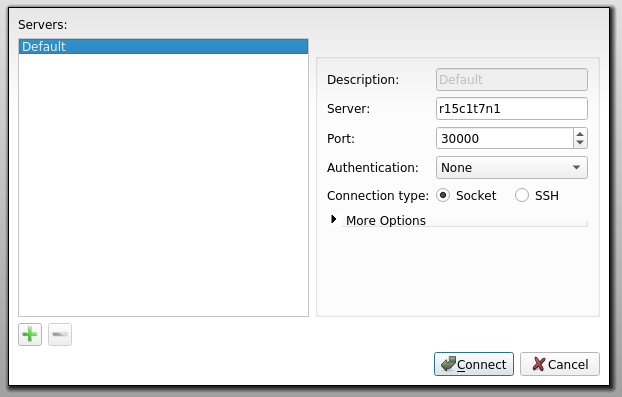

Then, on the head node, use the Vampir GUI's "Remote open" to open the same file by attaching to the server running on the heavyweight compute nodes.
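The host and port to enter in the "Remote open" dialog come from the server's startup message. A minimal sketch for extracting them, assuming the message has the form `Server listens on: <host>:<port>` as in the job output for this example:

```shell
#!/bin/sh
# Sketch: extract host and port from the VampirServer startup message.

server_line="Server listens on: n010802:30000"   # sample line from the job output

endpoint=${server_line##*: }   # drop everything up to ": "  -> n010802:30000
host=${endpoint%%:*}           # part before the colon
port=${endpoint##*:}           # part after the colon

echo "host=$host port=$port"
```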

Please note that vampirserver-core is memory-bound and may work best if started with only one MPI process per node, or one per socket, e.g. by using Open MPI's mpirun option -bysocket.
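The resulting process counts can be worked out as in the sketch below, which prints possible launch lines without executing them. Several things here are assumptions: the nodes are taken to be dual-socket, `-npernode 1` and `-npersocket 1` are used as the Open MPI 1.x options that place exactly one process per node or per socket (newer releases use `--map-by ppr:1:node` and `--map-by ppr:1:socket`), and the arguments that vampirserver-core itself needs are not shown on this page and are therefore left out.

```shell
#!/bin/sh
# Sketch: process counts for one server process per node or per socket.

NODES=16                          # from the qsub example: nodes=16
SOCKETS_PER_NODE=2                # assumption: dual-socket nodes
SERVER_CMD="vampirserver-core"    # server arguments omitted (not given in the text)

per_node=$NODES
per_socket=$((NODES * SOCKETS_PER_NODE))

# Dry run: print the launch lines instead of starting MPI jobs.
echo "mpirun -np $per_node -npernode 1 $SERVER_CMD"
echo "mpirun -np $per_socket -npersocket 1 $SERVER_CMD"
```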