
- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

Communication on Cray XC40 Aries network


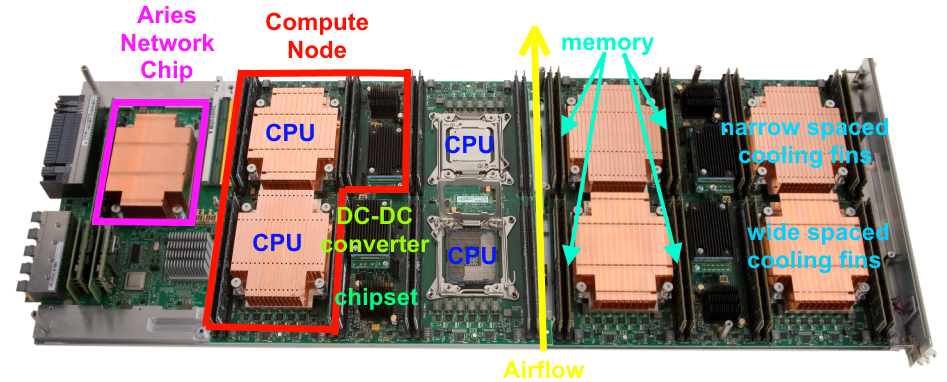

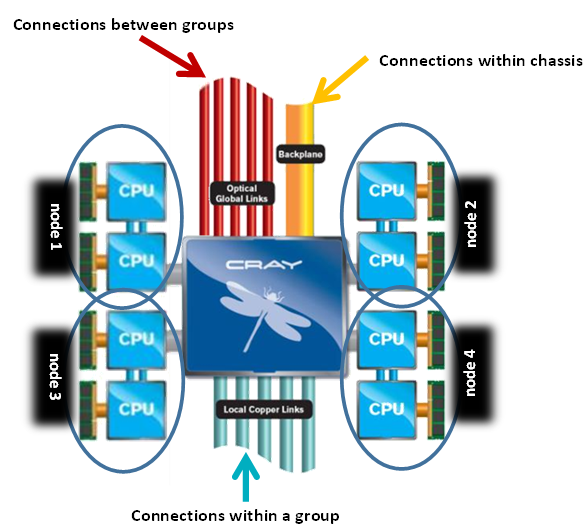


Latest revision as of 10:31, 22 February 2016

UNDER CONSTRUCTION

Communication in the Cray XC40 using the Aries network chip and the impact of HugePages

Introduction / Motivation

Since the upgrade from Hornet to Hazel Hen, performance variations have been reported increasingly often. Some of these variations are caused by I/O, but not all of them. HLRS and Cray have analyzed the issue: we can explain it and also offer a workaround that reduces the problem. Both are presented after a brief explanation of the underlying communication problem.

XC40 design

To understand the problem, a basic understanding of the communication mechanism of the Cray XC is necessary. Communication on the Cray XC runs over the Cray Interconnect, which is implemented by the Aries network chip.

- Four compute nodes share a single Aries network chip and together form a compute blade. The pictures show a compute blade, once in its physical structure and once as a logical view.

- 16 blades, each with a single Aries chip, are mounted in a chassis and connected all-to-all over the so-called backplane.

- Three of these chassis are mounted in a cabinet (rack), and two cabinets (6 chassis) form a Cabinet Group. Connections within a group are realized using copper cables.

- The cabinet groups are interconnected via optical cables (see picture).
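The packaging hierarchy above boils down to simple arithmetic. The following Python sketch computes the number of nodes per level and classifies the outermost kind of connection between two nodes. The linear node numbering (nodes counted blade by blade, then chassis by chassis, and so on) and the function names are hypothetical and only serve as an illustration; this is not the scheme Cray uses to name physical nodes.

```python
# Packaging hierarchy of the Cray XC40, as listed above.
NODES_PER_BLADE = 4       # compute nodes sharing one Aries chip
BLADES_PER_CHASSIS = 16   # connected all-to-all over the backplane
CHASSIS_PER_CABINET = 3
CABINETS_PER_GROUP = 2    # copper cables inside a group, optical cables between groups

NODES_PER_CHASSIS = NODES_PER_BLADE * BLADES_PER_CHASSIS      # 64
NODES_PER_CABINET = NODES_PER_CHASSIS * CHASSIS_PER_CABINET   # 192
NODES_PER_GROUP = NODES_PER_CABINET * CABINETS_PER_GROUP      # 384
ARIES_PER_GROUP = NODES_PER_GROUP // NODES_PER_BLADE          # 96


def locate(node: int) -> dict:
    """Split a linear node index into its position in the hierarchy.

    Hypothetical numbering: nodes are counted blade by blade, chassis by
    chassis, and so on. This is not Cray's naming of physical nodes.
    """
    group, rest = divmod(node, NODES_PER_GROUP)
    cabinet, rest = divmod(rest, NODES_PER_CABINET)
    chassis, rest = divmod(rest, NODES_PER_CHASSIS)
    blade, slot = divmod(rest, NODES_PER_BLADE)
    return {"group": group, "cabinet": cabinet, "chassis": chassis,
            "blade": blade, "node": slot}


def link_level(a: int, b: int) -> str:
    """Outermost kind of connection between two nodes under this numbering."""
    if a // NODES_PER_BLADE == b // NODES_PER_BLADE:
        return "same Aries chip"
    if a // NODES_PER_CHASSIS == b // NODES_PER_CHASSIS:
        return "backplane"
    if a // NODES_PER_GROUP == b // NODES_PER_GROUP:
        return "copper cables"
    return "optical cables"


print(NODES_PER_GROUP, ARIES_PER_GROUP)  # 384 96
print(locate(1000))  # {'group': 2, 'cabinet': 1, 'chassis': 0, 'blade': 10, 'node': 0}
print(link_level(0, 3), "|", link_level(0, 64), "|", link_level(0, 384))
```

With this numbering, nodes 0 to 3 share one Aries chip, node 64 is in the next chassis of the same group (copper cables), and node 384 is in the next Cabinet Group (optical cables).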

Cray XC communication mechanism

- Communication is performed by transferring data cache-coherently from the main memory of the source node into the main memory of the destination node.

- For this, the Aries chip has to translate logical addresses into physical memory addresses. The operating system manages memory in units of memory pages (pages).

- To avoid many of these calculations, some translations are stored in an internal table within the Aries chip, similar to the TLB (translation lookaside buffer) of a processor. If a translation is not present in this table, it has to be recalculated, which reduces performance.
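The translation step can be illustrated with a small model. In this Python sketch a virtual address is split into page number and offset, the page number is mapped to a physical frame through a page table, and each translation is kept in a cache, so only the first access to a page takes the slow path. The dict-based page table, its arbitrary frame numbers, the never-evicting cache and all names are assumptions for illustration; the real table in the Aries chip has a limited size and works differently in detail.

```python
KIB, MIB = 1024, 1024 * 1024


class CachedTranslator:
    """Toy model of virtual-to-physical address translation with a TLB-like cache.

    Hypothetical: the page table is a plain dict and the cache never evicts.
    """

    def __init__(self, page_size: int, page_table: dict):
        self.page_size = page_size
        self.page_table = page_table  # page number -> physical frame number
        self.cache = {}               # translations computed so far
        self.slow_lookups = 0         # how often the page table had to be consulted

    def translate(self, vaddr: int) -> int:
        page, offset = divmod(vaddr, self.page_size)
        frame = self.cache.get(page)
        if frame is None:
            # Not cached: take the slow path and remember the result.
            self.slow_lookups += 1
            frame = self.page_table[page]
            self.cache[page] = frame
        return frame * self.page_size + offset


def sweep_lookups(buffer_bytes: int, page_size: int, step: int = 4 * KIB) -> int:
    """Translate every 'step' bytes of a buffer; return the number of slow lookups."""
    n_pages = -(-buffer_bytes // page_size)      # ceiling division
    table = {p: p + 7 for p in range(n_pages)}   # arbitrary frame numbers
    tr = CachedTranslator(page_size, table)
    for vaddr in range(0, buffer_bytes, step):
        tr.translate(vaddr)
    return tr.slow_lookups


print(sweep_lookups(64 * MIB, 4 * KIB))  # 16384
print(sweep_lookups(64 * MIB, 2 * MIB))  # 32
```

Touching a 64 MiB buffer in 4 KiB steps needs one slow lookup per page: 16384 with 4 KiB pages, but only 32 with 2 MiB pages.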

Timing variation problem

To reduce this problem, configurable page sizes were introduced to Linux systems a few years ago. With larger pages (the default size is 4096 bytes), more memory can be addressed without a new address translation, so the number of necessary translations is reduced.

On the Cray XC40 we discovered that the Aries network chip can be overloaded by a very high volume of communication in combination with the default 4 KiB pages. This overload is caused especially by all-to-all and all-to-one communication patterns. Even a single communicating process can keep the Aries network chip busy recalculating addresses, consuming almost all of its resources. As a result, communication of the other nodes that use the same chip slows down.
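The interference described above can be reproduced in a toy model. The Python sketch below lets two nodes share one translation table with a fixed number of entries and least-recently-used replacement. Node A streams through a large buffer and touches a new 4 KiB region in every round, as in heavy all-to-all traffic; node B repeatedly works on a small buffer that fits into the table on its own. Table size, replacement policy, buffer sizes and the strict A/B alternation are assumptions chosen for illustration, not measured properties of the Aries chip.

```python
from collections import OrderedDict

KIB, MIB = 1024, 1024 * 1024


class SharedTranslationTable:
    """Toy translation table shared by all nodes on one (modeled) network chip.

    Hypothetical model: fixed capacity, one entry per (node, page),
    least-recently-used (LRU) replacement.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()  # keys ordered from least to most recently used

    def access(self, node: str, addr: int, page_size: int) -> bool:
        """Translate addr for node; return True on a hit, False on a miss."""
        key = (node, addr // page_size)
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return True
        self.entries[key] = True  # miss: store the new translation
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return False


def victim_misses(heavy_page_size: int, with_heavy: bool = True,
                  rounds: int = 2048, capacity: int = 192) -> int:
    """Count the misses of light node B, optionally sharing the table with heavy node A.

    A touches a new 4 KiB region of a large buffer in every round;
    B cycles through 512 KiB (128 pages of 4 KiB). Accesses alternate A, B.
    """
    table = SharedTranslationTable(capacity)
    stride, light_buf = 4 * KIB, 512 * KIB
    misses = 0
    for i in range(rounds):
        if with_heavy:
            table.access("A", i * stride, heavy_page_size)
        if not table.access("B", (i * stride) % light_buf, 4 * KIB):
            misses += 1
    return misses


print(victim_misses(4 * KIB, with_heavy=False))  # 128: B alone
print(victim_misses(4 * KIB))                    # 2048: A on 4 KiB pages
print(victim_misses(2 * MIB))                    # 128: A on 2 MiB pages
```

In this model B alone only has its 128 initial misses. Sharing the table with A on 4 KiB pages turns every one of B's 2048 accesses into a miss, although B's own access pattern is unchanged. When A uses 2 MiB pages, it needs only a few entries and B is back to 128 misses.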

- It has now been proven that performance degrades severely when an Aries chip is shared by different applications and one of them generates such a load.

- The load increases with the total number of MPI processes taking part in the communication. With Hazel Hen the number of nodes has almost doubled, and priority is given to large jobs of academic users. As a result, many jobs run on more than 1000 nodes.

- Since the system upgrade, many users have reported unpredictable run times of their applications, with variations of more than 100%. It has been verified that this can be avoided by using HugePages.

- Two kinds of applications benefit from HugePages: applications that use global communication routines intensively themselves, and applications that do not use such routines but share a network chip that is affected by the global communication of other applications. Setting HugePages as the default ensures that the Aries network chip keeps capacity for tasks other than translating addresses for global communication.

- In addition, Cray has optimized the MPI_Alltoall routines; if your application calls these routines directly, it is recommended to recompile it.
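How the load grows with the number of processes can be estimated by simple counting. The Python sketch below assumes a naive pairwise all-to-all in which every MPI rank sends one message to every other rank, a job that occupies all four nodes attached to an Aries chip, and 24 ranks per node. Cray's optimized MPI_Alltoall may use a different algorithm, so the numbers only illustrate the trend; the function names are hypothetical.

```python
NODES_PER_ARIES = 4  # four compute nodes share one Aries chip


def alltoall_messages_total(ranks: int) -> int:
    """Messages in one naive pairwise all-to-all: each rank sends to every other rank."""
    return ranks * (ranks - 1)


def alltoall_messages_per_aries(nodes: int, ranks_per_node: int = 24) -> int:
    """Messages sent by the ranks attached to one Aries chip in one naive all-to-all.

    Assumes the job occupies all four nodes attached to the chip.
    """
    ranks = nodes * ranks_per_node
    ranks_on_chip = NODES_PER_ARIES * ranks_per_node
    return ranks_on_chip * (ranks - 1)


for nodes in (500, 1000):
    print(nodes, alltoall_messages_per_aries(nodes))
# 500 1151904
# 1000 2303904
```

Under these assumptions, doubling a job from 500 to 1000 nodes roughly doubles the number of messages each Aries chip has to send in a single all-to-all, and each of them involves address translation on the chip.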

Module environment change and testing

Starting with the system upgrade on 4 and 5 January 2016, HugePages are set as the default on all Cray nodes. This change was necessary because the effects of overloading the Aries network chips were so drastic. Cray prepared this change thoroughly to identify and eliminate possible sources of problems.

You can test different HugePages sizes by

- re-linking your application with a HugePages module loaded, and

- switching to a specific HugePages module in your batch submission script (module switch craype-hugepages16MB craype-hugepagesXXX, where XXX is a size of at least 1M). This size does not have to be the one used for linking.

For more information see How to use HugePages. Please report issues with compiling, running and performance to the ticket system.

Feedback

We welcome feedback on your experience with compiling and with performance when using HugePages. More about pages and HugePages is described at: Pages@Wiki