- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

Hawk PrePostProcessing

For pre- and post-processing tasks with large memory requirements, multi-user SMP nodes are available. These nodes are reachable via the smp queue. For details, please see the [[Batch_System_PBSPro_(Hawk)#Node_types|node types]], [[Batch_System_PBSPro_(Hawk)#Examples_for_starting_batch_jobs:|examples]] and [[Batch_System_PBSPro_(Hawk)#smp|smp]] sections of the [[Batch_System_PBSPro_(Hawk)|batch system documentation]].

== Remote Visualization ==

Since Hawk is not equipped with graphics hardware for interactive visualization, visualization has to be done remotely, either on the Vulcan cluster or with locally installed clients.

=== ParaView ===

The ParaView server is installed on Hawk with MPI support for parallel execution and rendering, both on the Apollo 9000 CPU compute nodes and on the GPU-accelerated Apollo 6500 compute nodes. As this is a server-only installation, the Qt-based graphical user interface (GUI), i.e. the <code>paraview</code> command and the ParaView client, is not available. Scripted usage is possible via <code>pvbatch</code>. For interactive parallel post-processing and visualization, <code>pvserver</code> has to be used.

To enable parallel data processing and rendering on the Apollo 9000 CPU compute nodes, ParaView is built with the Mesa llvmpipe (Gallium) software renderer. These installations are available via the module environment:

<pre>
module load paraview-server[/<version>-mesa]
</pre>

For parallel rendering on the GPU-accelerated Apollo 6500 nodes, an EGL-enabled version of ParaView is installed. These installations are also available via the module environment:

<pre>
module load paraview-server[/<version>-egl]
</pre>

==== Client-Server Execution using the Vulcan Cluster==== | |||

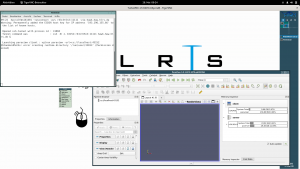

Graphical ParaView clients matching the server versions on Hawk are installed on the [[Vulcan|Vulcan]] cluster. For efficient connection we recommend to use the <code>vis_via_vnc.sh</code> script available via the VirtualGL module on Vulcan. More information can be found at [[Graphic_Environment]] | |||

[[File:Vnc desktop session.png|thumb]] | |||

===== Setting up the VNC desktop session =====

<pre>
ssh vulcan.hww.hlrs.de
module load VirtualGL
vis_via_vnc.sh
</pre>

Please note that this reserves a standard visualization node on Vulcan for one hour. Nodes with other graphics hardware are also available, and the reservation time can be increased. For a full list of options, please use:

<pre>
vis_via_vnc.sh --help
</pre>

To connect to the VNC session on the visualization node '''from your local client computer''', use one of the connection methods printed by the <code>vis_via_vnc.sh</code> script. The recommended way is to use a TigerVNC or TurboVNC viewer with the <code>-via</code> option:

<pre>
vncviewer -via <user_name>@cl5fr2.hww.hlrs.de <node_name>:<display_number>
</pre>

After a successful connection, you should be logged in to a remote Xvnc desktop session.

===== Setting up and executing a pvserver on Apollo 9000 nodes =====

For parallel execution, a regular compute node job has to be requested on Hawk, e.g.:

<pre>
qsub -l select=2:node_type=rome:mpiprocs=128,walltime=01:00:00 -I
</pre>

Once logged in to the interactive compute node job, load the paraview-server module and launch <code>pvserver</code>:

<pre>
module load paraview-server[/<version>]
mpirun -n 256 pvserver
</pre>
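The rank count passed to <code>mpirun -n</code> is the number of nodes times <code>mpiprocs</code> from the <code>select</code> statement (2 × 128 = 256 above). The following Python sketch derives both command lines from one set of parameters so they stay consistent. The helper <code>pvserver_commands</code> is hypothetical; it only formats strings in the PBSPro syntax shown above and does not submit anything.

```python
from typing import Tuple


def pvserver_commands(nodes: int, mpiprocs: int = 128, node_type: str = "rome",
                      walltime: str = "01:00:00") -> Tuple[str, str]:
    """Return (qsub, mpirun) command lines for an interactive pvserver job.

    Hypothetical helper: it only builds strings, it does not run them.
    """
    if nodes < 1 or mpiprocs < 1:
        raise ValueError("nodes and mpiprocs must be positive")
    qsub = (f"qsub -l select={nodes}:node_type={node_type}:mpiprocs={mpiprocs},"
            f"walltime={walltime} -I")
    # One pvserver rank per MPI process slot requested from PBS.
    mpirun = f"mpirun -n {nodes * mpiprocs} pvserver"
    return qsub, mpirun


if __name__ == "__main__":
    for line in pvserver_commands(2):
        print(line)
```

Calling <code>pvserver_commands(4)</code> would, for example, request four nodes and launch 512 ranks.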

If <code>pvserver</code> was launched successfully, it prints the connection details for the ParaView client, e.g.:

<pre>
s32979 r41c2t6n4 203$ mpirun -n 256 pvserver
Waiting for client...
Connection URL: cs://r41c2t6n4:11111
Accepting connection(s): r41c2t6n4:11111
</pre>
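The <code>host:port</code> pair printed after <code>Accepting connection(s):</code> is what the <code>-pvs</code> option of the <code>pvconnect</code> script expects. If the pvserver output is redirected to a log file, a small Python sketch like the following can extract it. The helper name is hypothetical, and it assumes the output format stays as shown above.

```python
import re
from typing import Optional, Tuple

# Matches e.g. "Accepting connection(s): r41c2t6n4:11111"
_ACCEPT_RE = re.compile(r"Accepting connection\(s\):\s*([^\s:]+):(\d+)")


def parse_pvserver_endpoint(output: str) -> Optional[Tuple[str, int]]:
    """Return (host, port) from pvserver startup output, or None if not found."""
    match = _ACCEPT_RE.search(output)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


if __name__ == "__main__":
    sample = (
        "Waiting for client...\n"
        "Connection URL: cs://r41c2t6n4:11111\n"
        "Accepting connection(s): r41c2t6n4:11111\n"
    )
    print(parse_pvserver_endpoint(sample))
```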

===== Setting up and executing a pvserver on Apollo 6500 nodes =====

For parallel execution, a compute node job on the Apollo 6500 nodes has to be requested on Hawk, e.g.:

<pre>
qsub -l select=2:node_type=rome-ai:mpiprocs=128,walltime=01:00:00 -I
</pre>

Once logged in to the interactive compute node job, load the paraview-server module and launch <code>pvserver</code>. Please note that for parallel rendering, the EGL device numbers have to be specified via the <code>--displays</code> option of <code>pvserver</code>:

<pre>
module load paraview-server[/<version>]
mpirun -n 128 pvserver --displays='0,1,2,3,4,5,6,7' : -n 128 pvserver --displays='0,1,2,3,4,5,6,7'
</pre>
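The two MPMD blocks (separated by <code>:</code>) in the command above are identical, one per node. For a different node count, the hypothetical helper below repeats the block accordingly. It only formats the command string and assumes, as the example does, that the MPI launcher places consecutive blocks of ranks on consecutive nodes; whether that holds depends on the rank placement of your MPI launcher.

```python
from typing import Sequence


def egl_pvserver_command(nodes: int, ranks_per_node: int = 128,
                         devices: Sequence[int] = range(8)) -> str:
    """Build an MPMD mpirun line with one pvserver block per node.

    Every block passes the same EGL device list via --displays, mirroring
    the two-node example above. Hypothetical helper; it only formats text.
    """
    if nodes < 1 or ranks_per_node < 1:
        raise ValueError("nodes and ranks_per_node must be positive")
    displays = ",".join(str(d) for d in devices)
    block = f"-n {ranks_per_node} pvserver --displays='{displays}'"
    # MPMD blocks are separated by " : " on the mpirun command line.
    return "mpirun " + " : ".join([block] * nodes)


if __name__ == "__main__":
    print(egl_pvserver_command(2))
```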

The example above launches a pvserver on two nodes, using 128 CPU cores and 8 GPUs on each node. If <code>pvserver</code> was launched successfully, it prints the connection details for the ParaView client in the same form as on the Apollo 9000 nodes, e.g.:

<pre>
s32979 r41c2t6n4 203$ mpirun -n 256 pvserver
Waiting for client...
Connection URL: cs://r41c2t6n4:11111
Accepting connection(s): r41c2t6n4:11111
</pre>

===== Connection of ParaView Client and Server via pvconnect =====

[[File:ParaView client connected to pvserver.png|thumb|ParaView Client connected to pvserver]]

To connect a graphical ParaView client running within the VNC session on Vulcan to the server running on the Hawk compute nodes, an SSH tunnel has to be established. For convenience, the client installation provides the <code>pvconnect</code> script. Please note that the script assumes that a password-less SSH connection using public/private key authentication is possible between Vulcan and Hawk.

The script takes two arguments:

#<code>-pvs</code> is the combination <code><servername>:<portnumber></code> printed during pvserver startup after <code>Accepting connection(s):</code>.
#<code>-via</code> is the hostname of the machine through which the tunnel to the pvserver host is opened. Usually this is hawk.hww.hlrs.de.

Taking the server startup example given above, use the following to launch a ParaView client within the VNC desktop session and directly connect it to the running server on the Hawk compute nodes:

<pre>
module load paraview
pvconnect -pvs r41c2t6n4:11111 -via hawk.hww.hlrs.de
</pre>
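Conceptually, <code>pvconnect</code> forwards a free local port through SSH to the pvserver's <code>host:port</code> and then starts the client with <code>-url=cs://localhost:<local port></code>. The Python sketch below reproduces only that planning step. Two assumptions: the free port is obtained by letting the operating system pick one via a socket bound to port 0 (a stand-in for <code>nettest -findport</code>, not what the script does), and the function names are hypothetical. The port is only known to be free at the moment of the check, so another process could grab it before SSH binds it.

```python
import socket
from typing import List, Tuple


def find_free_port() -> int:
    """Ask the OS for a currently unused local TCP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))
        return sock.getsockname()[1]


def tunnel_plan(pvs: str, via: str, local_port: int) -> Tuple[List[str], str]:
    """Return the ssh tunnel command and the client URL, as pvconnect builds them.

    pvs is "host" or "host:port"; the port defaults to 11111.
    """
    host, _, port = pvs.partition(":")
    port = port or "11111"
    ssh_cmd = ["ssh", "-N", "-L", f"{local_port}:{host}:{port}", via]
    url = f"cs://localhost:{local_port}"
    return ssh_cmd, url


if __name__ == "__main__":
    cmd, url = tunnel_plan("r41c2t6n4:11111", "hawk.hww.hlrs.de", find_free_port())
    print(" ".join(cmd))
    print("paraview -url=" + url)
```

Starting <code>ssh_cmd</code> with <code>subprocess.Popen</code>, running <code>paraview -url=...</code> and terminating the SSH process afterwards would mirror what the script does.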

The connection and the memory load on the server can be checked within the ParaView client by activating the Memory Inspector via the <code>View</code> menu.

==== Client-Server Execution using a local client ====

It is also possible to connect a locally running ParaView client to a server running on Hawk. For this, we recommend compiling a ParaView client of the matching version on your local machine.

===== ParaView Installation =====

Either check out the matching ParaView source version from the ParaView git repository, or download the source tar archive we provide in the ParaView installation directory on Hawk. With the ParaView module loaded, the sources can be found in

<pre>
$PARAVIEW_SRC
</pre>

On your local machine, extract the archive and define your installation location:

<pre>
export PV_PREFIX=<path_to_installation_directory>
</pre>

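Set up your build directory:

<pre>
cd ParaView/
export PV_VERSION=$(git describe --tags)
echo $PV_VERSION
cd ..
mkdir ParaView_${PV_VERSION}_build_client
cd ParaView_${PV_VERSION}_build_client
</pre>

The commands assume that the sources are in a directory named <code>ParaView</code>. Note that <code>git describe</code> only works in a git checkout; if you extracted the tar archive instead, set <code>PV_VERSION</code> manually to the corresponding version string.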

Configure the ParaView build with CMake:

<pre>
cmake ../ParaView \
  -DCMAKE_BUILD_TYPE=Release \
  -DCMAKE_CXX_COMPILER=c++ \
  -DCMAKE_C_COMPILER=gcc \
  -DCMAKE_Fortran_COMPILER=gfortran \
  -DPARAVIEW_USE_PYTHON=ON \
  -DPARAVIEW_INSTALL_DEVELOPMENT_FILES=ON \
  -DPARAVIEW_BUILD_SHARED_LIBS=ON \
  -DBUILD_TESTING=OFF \
  -DCMAKE_INSTALL_PREFIX=$PV_PREFIX/paraview/${PV_VERSION}/client
</pre>

If you would like to use ray traced rendering, please install OSPRay on your local machine and add the following two lines to the <code>cmake</code> command (the preceding line then needs a trailing backslash):

<pre>
  -DPARAVIEW_ENABLE_RAYTRACING=ON \
  -Dospray_DIR=<path_to_ospray_installation>/lib/cmake/ospray-2.9.0
</pre>

Here <code><path_to_ospray_installation></code> has to be replaced with the path to your local OSPRay installation, and <code>ospray-2.9.0</code> with the directory matching your OSPRay version. At HLRS we use the precompiled binary distributions available at [https://www.ospray.org/downloads.html]. After a successful configuration, build and install with

<pre>
make -j 20
make install
</pre>
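The configure step can also be driven from Python, for example to switch ray tracing on or off reproducibly. The hypothetical helper below only assembles the argument list from the options shown above; running it, e.g. with <code>subprocess.run(args, check=True, cwd=build_dir)</code> inside the build directory, is left to the caller.

```python
from typing import List, Optional


def paraview_cmake_args(src_dir: str, pv_prefix: str, pv_version: str,
                        ospray_dir: Optional[str] = None) -> List[str]:
    """Assemble the cmake configure command shown above (hypothetical helper)."""
    args = [
        "cmake", src_dir,
        "-DCMAKE_BUILD_TYPE=Release",
        "-DCMAKE_CXX_COMPILER=c++",
        "-DCMAKE_C_COMPILER=gcc",
        "-DCMAKE_Fortran_COMPILER=gfortran",
        "-DPARAVIEW_USE_PYTHON=ON",
        "-DPARAVIEW_INSTALL_DEVELOPMENT_FILES=ON",
        "-DPARAVIEW_BUILD_SHARED_LIBS=ON",
        "-DBUILD_TESTING=OFF",
        f"-DCMAKE_INSTALL_PREFIX={pv_prefix}/paraview/{pv_version}/client",
    ]
    if ospray_dir is not None:
        # Optional ray tracing: ospray_dir is the directory holding OSPRay's
        # CMake package files, e.g. <install>/lib/cmake/ospray-2.9.0.
        args += ["-DPARAVIEW_ENABLE_RAYTRACING=ON", f"-Dospray_DIR={ospray_dir}"]
    return args


if __name__ == "__main__":
    print(" ".join(paraview_cmake_args("../ParaView", "/opt/pv", "v5.10.1")))
```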

Finally, extend your <code>PATH</code> and <code>LD_LIBRARY_PATH</code> environment variables (depending on your distribution, the libraries may be installed in <code>lib</code> instead of <code>lib64</code>):

<pre>
export PATH=$PV_PREFIX/paraview/${PV_VERSION}/client/bin:$PATH
export LD_LIBRARY_PATH=$PV_PREFIX/paraview/${PV_VERSION}/client/lib64:$LD_LIBRARY_PATH
</pre>

The newly installed <code>paraview</code> client should now be executable.

===== Using pvconnect locally =====

On the Vulcan cluster we provide the <code>pvconnect</code> script, which sets up an SSH tunnel, launches a ParaView client and directly connects it to a pvserver running on Hawk. The script can also be used on a local machine if the <code>nettest</code> utility is available there and the locally built <code>paraview</code> is in your <code>PATH</code>. Simply copy the code below into a local file, make it executable and connect to a running pvserver [[Hawk PrePostProcessing#Connection of ParaView Client and Server via pvconnect|as described above]].

<pre>
#!/bin/bash
###############################################################################
# pvconnect
# --------------
# The script opens an ssh tunnel to a given compute node via a gateway host
# and launches a ParaView client with the correct -url option
###############################################################################
usage()
{
  echo
  echo "USAGE: $0"
  echo "  -pvs pvserver[:port] -via host"
  echo
  echo "-pvs = ParaView server to connect to. Either the hostname alone or"
  echo "       hostname:port can be given. The hostname:port combination is"
  echo "       normally printed by pvserver in the line:"
  echo "         Accepting connection(s): hostname:port"
  echo "       If no port is given, the default port 11111 is used."
  echo "-via = Hostname via which to connect to the server, usually the"
  echo "       login node hawk.hww.hlrs.de."
  echo
  exit "$1"
}
#
if [ $# -eq 0 ]; then
  usage 0
fi
#
# Let nettest find a free local port for the tunnel
port=$(nettest -findport)
#
# -----------------------------------------------------------------------------
# Parse Arguments -------------------------------------------------------------
while [ $# -gt 0 ]
do
  case "$1" in
    -pvs*) acc_con=$2; shift ;;
    -via*) VIA=$2; shift ;;
    -help*|--help*) usage 0 ;;
    *) break ;;
  esac
  shift
done
#
if [ -z "$acc_con" ]; then
  echo "!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"
  echo "!! Argument for ParaView server is missing !!"
  echo "!! Please specify the -pvs option !!"
  echo "!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"
  exit 1
fi
#
if [ -z "$VIA" ]; then
  echo "!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"
  echo "!! Found no host to connect through in arguments !!"
  echo "!! Please specify the -via option !!"
  echo "!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"
  exit 1
fi
#
# -----------------------------------------------------------------------------
# Get target and port ---------------------------------------------------------
TARGET=$(echo "$acc_con" | cut -d: -f1)
# -s prints nothing if there is no ':', so the default port is used below
PVP=$(echo "$acc_con" | cut -s -d: -f2)
#
if [ -z "$PVP" ]; then
  PVP=11111
  echo " "
  echo " Found no ParaView server port in arguments"
  echo " Using the default one: 11111"
fi
#
# -----------------------------------------------------------------------------
# Open tunnel -----------------------------------------------------------------
ssh -N -L "$port:$TARGET:$PVP" "$VIA" &
sshpid=$!
# Close the tunnel whenever the script exits
trap 'kill ${sshpid} 2>/dev/null' EXIT
#
# Give ssh some time to establish the tunnel
sleep 10
#
echo " "
echo " Opened ssh-tunnel with process id : ${sshpid}"
echo " Tunnel command was : ssh -N -L $port:$TARGET:$PVP $VIA &"
#
# -----------------------------------------------------------------------------
# Launch paraview client ------------------------------------------------------
echo " "
echo " Launching paraview client : paraview -url=cs://localhost:$port"
paraview -url=cs://localhost:"$port"
</pre>

==== Usage examples ==== | |||

In this section small usage examples are documented | |||

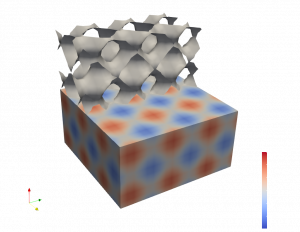

===== XDMF =====

ParaView cannot load arbitrary HDF5 files directly, because HDF5 offers no standardized way to annotate topological information. Therefore an XDMF header file has to be provided that tells ParaView how the datasets contained in an HDF5 file are to be interpreted.

[[File:Test.xdmf.png.png|thumb]]

The following Python script uses h5py to create a 3D dataset and write it to the file <code>data.h5</code>:

<pre>
import h5py
import numpy as np

# Node values on a 20 x 20 x 20 grid: a[i, j, k] = sin(i) + sin(j) + sin(k)
s = np.sin(np.arange(20, dtype=np.float64))
a = s[:, None, None] + s[None, :, None] + s[None, None, :]

# Write the array as dataset "dataset_3D"; the file is closed automatically
with h5py.File('data.h5', 'w') as h5f:
    h5f.create_dataset('dataset_3D', data=a)
</pre>

The corresponding topological information, namely that the dataset holds the node data of a 3D uniform Cartesian grid with origin [0,0,0], a spacing of 1.0 and 20 grid points in each dimension, can be specified with the following XDMF header:

<pre>
<?xml version="1.0" encoding="utf-8"?>
<Xdmf xmlns:xi="http://www.w3.org/2001/XInclude" Version="3.0">
  <Domain>
    <Grid Name="Grid">
      <Geometry Origin="" Type="ORIGIN_DXDYDZ">
        <DataItem DataType="Float" Dimensions="3" Format="XML" Precision="8">0 0 0</DataItem>
        <DataItem DataType="Float" Dimensions="3" Format="XML" Precision="8">1 1 1</DataItem>
      </Geometry>
      <Topology Dimensions="20 20 20" Type="3DCoRectMesh"/>
      <Attribute Center="Node" Name="Data" Type="Scalar">
        <DataItem DataType="Float" Dimensions="20 20 20" Format="HDF" Precision="8">data.h5:/dataset_3D</DataItem>
      </Attribute>
    </Grid>
  </Domain>
</Xdmf>
</pre>
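For other grid sizes, the header does not have to be written by hand. The following sketch generates an equivalent header with Python's standard <code>xml.etree.ElementTree</code> module. The function name <code>xdmf_uniform_grid</code> is hypothetical, and the unused XInclude namespace declaration is omitted. Dimensions are written in the order of the HDF5 dataset shape; for non-cubic grids, please check the axis-ordering conventions of <code>3DCoRectMesh</code> and <code>ORIGIN_DXDYDZ</code> in the XDMF documentation.

```python
import xml.etree.ElementTree as ET
from typing import Sequence


def xdmf_uniform_grid(h5_path: str, dataset: str, shape: Sequence[int],
                      origin: Sequence[float] = (0.0, 0.0, 0.0),
                      spacing: Sequence[float] = (1.0, 1.0, 1.0),
                      name: str = "Data") -> str:
    """Return an XDMF header describing node data on a 3D uniform grid."""
    dims = " ".join(str(n) for n in shape)
    root = ET.Element("Xdmf", {"Version": "3.0"})
    grid = ET.SubElement(ET.SubElement(root, "Domain"), "Grid", {"Name": "Grid"})
    geometry = ET.SubElement(grid, "Geometry", {"Type": "ORIGIN_DXDYDZ"})
    # First DataItem: origin, second DataItem: spacing (float64 values).
    for values in (origin, spacing):
        item = ET.SubElement(geometry, "DataItem", {
            "DataType": "Float", "Dimensions": "3",
            "Format": "XML", "Precision": "8"})
        item.text = " ".join(str(v) for v in values)
    ET.SubElement(grid, "Topology", {"Dimensions": dims, "Type": "3DCoRectMesh"})
    attribute = ET.SubElement(grid, "Attribute", {
        "Center": "Node", "Name": name, "Type": "Scalar"})
    data = ET.SubElement(attribute, "DataItem", {
        "DataType": "Float", "Dimensions": dims,
        "Format": "HDF", "Precision": "8"})
    data.text = f"{h5_path}:/{dataset}"
    declaration = '<?xml version="1.0" encoding="utf-8"?>\n'
    return declaration + ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(xdmf_uniform_grid("data.h5", "dataset_3D", (20, 20, 20)))
```

The returned string can be saved, e.g. as <code>data.xdmf</code> next to <code>data.h5</code>, and opened in ParaView.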

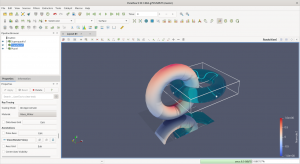

===== OSPRay Rendering =====

[[File:ParaView-OSPRay-Mat-Selection.png|thumb|ParaView Material Selection and Ray Tracing Demo]]

Starting with ParaView version 5.10, the OSPRay ray tracing renderer is compiled into both client and server. With the ParaView module loaded, a material database provided by [https://gitlab.kitware.com/paraview/materials] under the Creative Commons CC0 license can be found in

<pre>
$PARAVIEW_MAT
</pre>

Once the <code>ospray_mats.json</code> file is loaded via <code>Load Path Tracer Materials...</code> from the <code>File</code> menu, a material can be selected in the <code>Ray Tracing</code> section of the Properties panel. The material is rendered in the 3D scene once the <code>Enable Ray Tracing</code> check box in the <code>Ray Traced Rendering</code> section of the Properties panel is activated and <code>OSPRay pathtracer</code> is selected from the <code>Back End</code> drop-down menu.

Please note that OSPRay is currently only available in the client and Mesa versions of the installations.
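To see which materials the database offers before loading it in the GUI, the JSON file can be inspected with a few lines of Python. This sketch assumes that <code>ospray_mats.json</code> lies directly in <code>$PARAVIEW_MAT</code> and that it uses a top-level <code>materials</code> object keyed by material name; both are assumptions, and the function names are hypothetical.

```python
import json
import os
from typing import Dict, List


def material_names(library: Dict) -> List[str]:
    """Return the sorted material names of a parsed OSPRay material library.

    Assumes a layout like {"family": "OSPRay", "materials": {name: {...}}}.
    """
    return sorted(library.get("materials", {}))


def load_material_names(path: str) -> List[str]:
    """Read a materials JSON file (e.g. ospray_mats.json) and list its materials."""
    with open(path, encoding="utf-8") as handle:
        return material_names(json.load(handle))


if __name__ == "__main__":
    # PARAVIEW_MAT is set by the ParaView module; the file location is assumed.
    mat_dir = os.environ.get("PARAVIEW_MAT")
    mat_file = os.path.join(mat_dir, "ospray_mats.json") if mat_dir else ""
    if mat_file and os.path.isfile(mat_file):
        for name in load_material_names(mat_file):
            print(name)
```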

Latest revision as of 11:27, 23 September 2022
