- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

Graphic Environment

For graphical pre- and post-processing, six visualisation nodes have been integrated into the Nehalem cluster. Each node is equipped with an NVIDIA Quadro FX 5800 with 4 GB of graphics memory, one quad-core CPU and 24 GB of main memory. The nodes are requested via the node feature vis:

user@cl3fr1>qsub -I -lnodes=1:vis

Two methods for using the graphics hardware for remote rendering have been tested so far: via TurboVNC, or directly via VirtualGL.

VNC Setup

If you want to use the Nehalem graphics hardware, e.g. for graphical pre- and post-processing, one way to do so is via a VNC connection. For this you need to install a VNC viewer on your local machine.

TurboVNC, for example, is available as a pre-compiled package from http://www.virtualgl.org/Downloads/TurboVNC. On Fedora 13, the tigervnc package can be installed with yum.

Other VNC viewers can also be used, but we recommend TurboVNC, an accelerated version of TightVNC designed for video and 3D applications. The visualisation nodes run the TurboVNC server, and combining it with the TurboVNC viewer gives the best performance for GL applications.

Preparing the Nehalem Cluster

Before using VNC for the first time, log in to the Nehalem frontend and run

vncpasswd

This creates a .vnc directory in your home directory containing the password file passwd.
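Since ~/.vnc/passwd holds your VNC password, it is good practice to keep it readable only by you. The following is a minimal sketch of a check you could run on the frontend; the function name vnc_passwd_ok is hypothetical and not an HLRS tool, and it assumes GNU stat as found on Linux systems.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the HLRS environment): succeed only if the
# VNC password file exists and has mode 600 (owner read/write, no other access).
# Assumes GNU coreutils stat (-c %a prints the octal permission bits).
vnc_passwd_ok() {
  local f="${1:-$HOME/.vnc/passwd}"   # default: the file created by vncpasswd
  [ -f "$f" ] || return 1             # file missing: vncpasswd not run yet
  [ "$(stat -c %a "$f")" = "600" ]    # reject group/other permissions
}

# Usage on the frontend:
#   vnc_passwd_ok || echo "run vncpasswd, or chmod 600 ~/.vnc/passwd" >&2
```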

Starting the TurboVNC server

To start a TurboVNC server, first log in to the cluster frontend cl3fr1.hww.de via ssh:

user@client>ssh cl3fr1.hww.de

Then load the VirtualGL module to set up the TurboVNC environment, and start the TurboVNC server:

user@cl3fr1>module load tools/VirtualGL
user@cl3fr1>vis_via_vnc.sh XX:XX:XX

where XX:XX:XX is the walltime (HH:MM:SS) required for your visualisation job.

The vis_via_vnc.sh script reports the name of the node reserved for you, a display number and the node's IP address.
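A mistyped walltime is easy to miss when starting the job. As a minimal sketch, the hypothetical function is_walltime below (not part of vis_via_vnc.sh or vis_via_vgl.sh) accepts only the HH:MM:SS form used on this page, allowing one to three hour digits; whether the batch system accepts other forms is not assumed here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only for walltimes of the form HH:MM:SS,
# with 1-3 hour digits and minutes/seconds in the range 00-59.
is_walltime() {
  local re='^[0-9]{1,3}:[0-5][0-9]:[0-5][0-9]$'
  [[ $1 =~ $re ]]
}

# Usage on the frontend, after "module load tools/VirtualGL":
#   wt=02:00:00
#   if is_walltime "$wt"; then vis_via_vnc.sh "$wt"; else echo "bad walltime: $wt" >&2; fi
```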

VNC viewer with -via option

If your VNC viewer supports the -via option, as TurboVNC and TigerVNC do, you can call it as follows:

user@client>vncviewer -via cl3fr1.hww.de <node name>:<Display#>

VNC viewer without -via option

If your VNC viewer does not support the -via option, as with JavaVNC or TightVNC for Windows, you first have to set up an SSH tunnel via the frontend and then point the VNC viewer at localhost:

user@client>ssh -N -L10000:<IP-address of vis-node>:5901 cl3fr1.hww.de &
user@client>vncviewer localhost:10000

Port 5901 corresponds to display number 1; for display number N, use port 5900+N.
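By VNC convention, display :N is served on TCP port 5900+N, so the remote port in the tunnel depends on the display number reported by vis_via_vnc.sh. A minimal sketch that prints the two client-side commands for a given IP address and display number follows; the function names are hypothetical, 10.0.0.5 is a placeholder address, and the local port 10000 is simply the one used in the example above.

```shell
#!/usr/bin/env bash
# VNC convention: display :N listens on TCP port 5900+N.
# Accepts "3" or ":3" as the display number.
vnc_port() {
  local n="${1#:}"          # strip an optional leading colon
  echo $((5900 + n))
}

# Hypothetical helper: print the SSH tunnel and viewer commands.
# Arguments: vis-node IP address, display number, local port (default 10000).
vnc_tunnel_cmds() {
  local ip="$1" display="$2" local_port="${3:-10000}"
  echo "ssh -N -L ${local_port}:${ip}:$(vnc_port "$display") cl3fr1.hww.de &"
  echo "vncviewer localhost:${local_port}"
}

# Usage on the client (placeholder IP and display number):
#   vnc_tunnel_cmds 10.0.0.5 3
```

For display 3 the printed tunnel forwards local port 10000 to port 5903 on the vis node.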

Enter the VNC password you set on the frontend; a GNOME session should then appear in your VNC viewer.

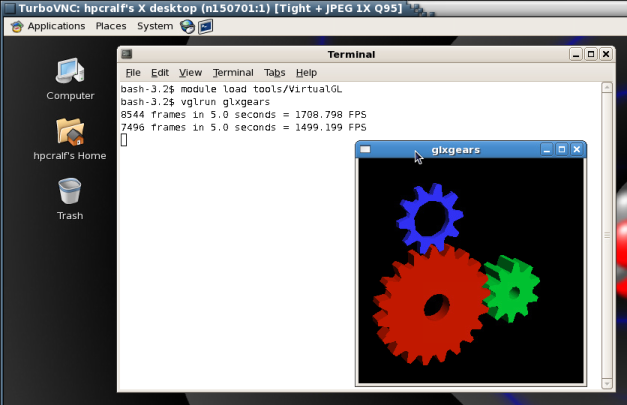

GL applications within the VNC session

To run 64-bit GL applications within this session, open a shell, load the VirtualGL module again to set up the VirtualGL environment, and start the application with the VirtualGL wrapper command:

vglrun <Application command>
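To check that vglrun actually renders on the Quadro card, you can inspect the "OpenGL renderer string" line printed by glxinfo; without vglrun, a GL program in the VNC session typically gets a software renderer instead. A minimal sketch, assuming glxinfo is installed on the visualisation node; the function name uses_quadro_renderer is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read glxinfo output on stdin and succeed if the
# OpenGL renderer line names a Quadro board rather than a software renderer.
uses_quadro_renderer() {
  grep -q '^OpenGL renderer string:.*Quadro'
}

# Usage inside the VNC session (assumes glxinfo is available):
#   module load tools/VirtualGL
#   vglrun glxinfo | uses_quadro_renderer && echo "rendering on the GPU"
```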

Ending the VNC session

If you no longer need the VNC session and the requested walltime has not yet expired, delete your queue job with

user@cl3fr1>qdel <your_job_id>

VirtualGL Setup (without TurboVNC)

To use VirtualGL you have to install it on your local client. It is available in the form of pre-compiled packages at http://www.virtualgl.org/Downloads/VirtualGL.

Linux

After installing VirtualGL, connect to cl3fr1.hww.de with the vglconnect command:

user@client>vglconnect -s cl3fr1.hww.de

Then load the VirtualGL module, submit the visualisation queue job with vis_via_vgl.sh, and connect to the reserved visualisation node with vglconnect (n150701 below is an example; use the node reserved for your job):

user@cl3fr1>module load tools/VirtualGL
user@cl3fr1>vis_via_vgl.sh XX:XX:XX
user@cl3fr1>vglconnect -s n150701

where XX:XX:XX is the walltime required for your visualisation job.

On the visualisation node you can then run 64-bit GL applications with the VirtualGL wrapper command:

user@vis-node>module load tools/VirtualGL
user@vis-node>vglrun <Application command>