- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CPE

- Cray Programming Environment User Guide: CSM for HPE Cray EX Systems

- HPE CPE User Guide

CPE on Hawk

Loading and Unloading CPE

    $ module avail CPE
    CPE/22.11 (D)    CPE/23.12
    $ module load CPE
    Lmod is automatically replacing "hlrs-software-stack/current" with "CPE/22.11".
    $ module -t list |& sort
    cce/15.0.0
    .cpe/22.11
    CPE/22.11
    cray-dsmml/0.2.2
    cray-libsci/22.11.1.2
    cray-mpich-ucx/8.1.21
    cray-pals/1.2.4
    craype/2.7.19
    craype-network-ucx
    craype-x86-rome
    perftools-base/22.09.0
    PrgEnv-cray/8.3.3
    system/site_names
    system/wrappers/1.0
    system/ws/8b99237
    $ module load hlrs-software-stack +gcc +mpt
    Lmod is automatically replacing "CPE/22.11" with "hlrs-software-stack/current".

General Notes

- Use the compiler drivers `cc`, `CC`, and `ftn` to compile and link C, C++, and Fortran code. If you want to see which tools are invoked or which flags and options are passed, compile with `-v` or check the output of `--cray-print-opts=[cflags|libs|all]`.

- Cray compilers can generate detailed yet readable optimization reports. Try out `-fsave-loopmark` (C/C++) or `-rm` (Fortran) and inspect the resulting listing files (*.lst).

- The Cray Fortran compiler is a highly optimizing compiler that performs extensive analyses at

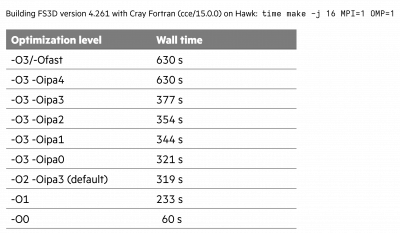

-O2or greater. If you value short compile times during development, consider dialing down the optimization level to-O1or-O0, or see if-Oipa1or-Oipa0makes a difference. Be aware that the default optimization level is-O2(and-Oipa3), not-O0.

Below is an experiment to see the impact of different-Ooptions on the compilation time of a medium-sized code base (FS3D in this example):

- To get more detail on compiler messages, warnings, errors, and runtime errors, use `explain`. For example, `explain ftn-2103` gives more information about Fortran warning 2103.

`man intro_directives` gives an overview of the pragmas and directives supported by the Cray compilers, in case you are looking for specific extensions.

- Prefer `mpiexec` to `mpirun` in PBS batch scripts, unless you make sure that aliases are expanded, for example with `shopt -s expand_aliases` (bash does not expand aliases in non-interactive shells by default).

`man mpiexec` contains a few examples of binding processes and threads to CPUs. Use `--cpu-bind depth -d $OMP_NUM_THREADS` for hybrid programs. In general, we recommend experimenting with xthi to verify that you get the binding you want.
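The binding options above can be wrapped in a small helper so that the launch line can be inspected before it is run. This is a minimal sketch: the function name `launch_hybrid` and the `DRY_RUN` variable are hypothetical and not part of CPE or PALS; only the `mpiexec` options are taken from the text above.

```shell
# Hypothetical helper: launch a hybrid MPI/OpenMP program with the depth
# binding recommended above. Set DRY_RUN to any non-empty value to print
# the command line instead of executing it.
launch_hybrid() {
    nprocs="$1"
    shift
    # Fall back to one thread per rank if OMP_NUM_THREADS is not set.
    threads="${OMP_NUM_THREADS:-1}"
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty.
    ${DRY_RUN:+echo} mpiexec -np "$nprocs" --cpu-bind depth -d "$threads" "$@"
}
```

Inside a PBS job, `launch_hybrid 8 ./xthi` would then run xthi with this binding, and `DRY_RUN=1` shows the exact command first.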

- The Parallel Application Launch Service (PALS) is not configured to allow launching applications on login nodes. You can run `mpirun` or `mpiexec` only on compute nodes.

- The environment variable PE_ENV can be used to determine which PrgEnv-<env> is active. If something requires Cray compilers, for example, you can test `[ "$PE_ENV" = "CRAY" ]`.
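In a build script, this test can be turned into a guard that stops early with a readable message. The following is a minimal sketch; the function name `require_prgenv` is hypothetical, and the only assumption about CPE is that PE_ENV holds the upper-case environment name, as in the CRAY example above.

```shell
# Hypothetical helper (not part of CPE): succeed only if the active
# programming environment matches the expected one, e.g. CRAY.
require_prgenv() {
    expected="$1"
    if [ "${PE_ENV:-}" = "$expected" ]; then
        return 0
    fi
    # Report the mismatch on stderr; "none" means PE_ENV is unset or empty.
    echo "PrgEnv mismatch: expected $expected, found ${PE_ENV:-none}" >&2
    return 1
}
```

A script that needs the Cray compilers could then start with `require_prgenv CRAY || exit 1`.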

- In some cases, it is necessary to prepend CRAY_LD_LIBRARY_PATH to LD_LIBRARY_PATH, in particular when you want to use a non-default version of a dynamic library that is not present in /opt/cray/pe/lib64. (Note: This requires more than one version of CPE installed on the system.) If `ldd <exe>` reports missing dependencies, check if `LD_LIBRARY_PATH=$CRAY_LD_LIBRARY_PATH:$LD_LIBRARY_PATH ldd <exe>` resolves them.
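The two `ldd` calls above can be compared side by side with a short helper. This is only a sketch: the function name `report_missing_libs` is hypothetical, and it assumes that `ldd` marks unresolved libraries with the text "not found", as the glibc version does.

```shell
# Hypothetical helper: list the dependencies ldd cannot resolve for an
# executable, first with the current LD_LIBRARY_PATH and then with
# CRAY_LD_LIBRARY_PATH prepended.
report_missing_libs() {
    exe="$1"
    echo "With current LD_LIBRARY_PATH:"
    ldd "$exe" | grep 'not found' || echo "  (none)"
    echo "With CRAY_LD_LIBRARY_PATH prepended:"
    # Only add the separating colon if CRAY_LD_LIBRARY_PATH is non-empty.
    LD_LIBRARY_PATH="${CRAY_LD_LIBRARY_PATH:+$CRAY_LD_LIBRARY_PATH:}${LD_LIBRARY_PATH:-}" \
        ldd "$exe" | grep 'not found' || echo "  (none)"
}
```

If the second list is shorter than the first, prepending CRAY_LD_LIBRARY_PATH at run time is likely what the executable needs.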

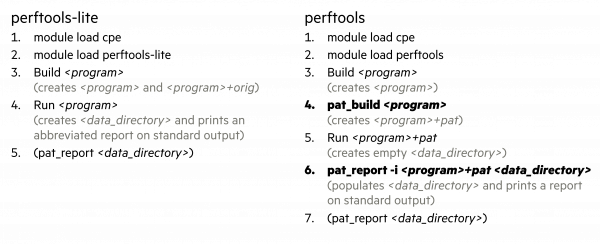

- The Cray Performance Analysis Tools (CrayPat) include a simplified, easy-to-use interface called perftools-lite, which automates a few tasks for you, namely instrumentation and report generation. Below are the basic steps that you would follow when using perftools-lite or perftools, with extra steps required by perftools bolded:

- When running `pat_report` on an empty experiment data directory, include `-i <exe>` to specify where your instrumented executable `<exe>` can be found. This is more robust than relying on a recorded path, which may no longer exist by the time you run `pat_report`. You won't need this option if you launch your instrumented program with `mpiexec --no-transfer`.

- The HDF5 tools in /opt/cray/pe/hdf5-parallel/1.12.2.1/bin depend on libmpi_gnu_91.so. To use these tools, switch to PrgEnv-gnu or prepend /opt/cray/pe/mpich/8.1.21/ucx/gnu/9.1/lib to LD_LIBRARY_PATH, for example, like this:

    LD_LIBRARY_PATH=/opt/cray/pe/mpich/8.1.21/ucx/gnu/9.1/lib:$LD_LIBRARY_PATH h5dump --version

Known Issues and Solutions/Workarounds

- Using PrgEnv-gnu and gcc/12.1.0, you might see ld complain about being "unable to initialize decompress status for section .debug_info" during linking. This warning goes away after loading an older version of gcc, for instance, gcc/10.3.0. Likewise, if you have trouble generating debugging information (`-g`), consider switching to an older version of gcc.

- Using PrgEnv-intel and intel/2022.0.2 or intel-oneapi/2022.0.2, you will see ftn (ifort or ifx) warn about "overriding '-march=core-avx2' with '-march=core-avx2'". The reason is that `-march=core-avx2` is indeed passed twice, by the installation's ifort.cfg or ifx.cfg and by CPE's x86-rome CPU target. You can ignore this warning.

- Some runtime libraries, such as libcrayacc, depend on libcuda, which is not available everywhere. If you want to cross-compile OpenACC or OpenMP GPU code on a login node, invoke the linker with `--allow-shlib-undefined` (`-Wl,--allow-shlib-undefined`) to allow undefined symbols in shared objects. The resulting executable will run on an AI node.

- If you try to populate an empty experiment data directory using `pat_report`, but `pat_report` can't open the instrumented executable, referring to a file in /var/run/palsd/... that no longer exists, invoke `pat_report` with `-i <exe>` as suggested above, or use `mpiexec --no-transfer` to run your experiment.

- [Fixed in CPE 23.12] Version 22.09 of CrayPat (perftools and perftools-lite) has a bug in combination with PBS Pro and PALS that can cause different node names to map to the same node ID (a deficiency in CrayPat's internal hashing of node names). When this happens, it affects not only the summary printed by `pat_report` ("Numbers of PEs per Node"), but also rank order suggestions ("No rank order was suggested because all ranks are on one node."). Fortunately, there is a workaround: convert node names to node IDs ourselves and set SLURM_NODEID. Using xthi as an example, instead of running `mpirun [...] ./xthi`, we can use a small utility, like /sw/hawk-rh8/hpe/cpe/utils/hash_node_name.py, and run `mpirun [...] bash -c 'SLURM_NODEID=$(python3 hash_node_name.py) ./xthi'`, or create and "mpirun" a wrapper script.

Below is an example invocation of `mpirun` to profile a perftools-instrumented program `<PROGRAM>+pat` (created by `pat_build <PROGRAM>`):
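The wrapper-script variant of this workaround could look like the following sketch. The file name `pat_wrapper.sh` is hypothetical. The sketch assumes that hash_node_name.py prints the node ID on standard output when called without arguments, as in the `bash -c` command above. The `[...]` placeholder stands for your usual `mpirun` options.

```shell
# Create a small wrapper that sets SLURM_NODEID before starting the program.
cat > pat_wrapper.sh <<'EOF'
#!/usr/bin/env bash
# Convert the node name to a node ID ourselves and pass it on via SLURM_NODEID.
SLURM_NODEID="$(python3 /sw/hawk-rh8/hpe/cpe/utils/hash_node_name.py)"
export SLURM_NODEID
# Replace the wrapper with the instrumented program and its arguments.
exec "$@"
EOF
chmod +x pat_wrapper.sh

# Inside a PBS job, launch the instrumented program through the wrapper:
#   mpirun [...] ./pat_wrapper.sh ./<PROGRAM>+pat
```

Because the wrapper uses `exec "$@"`, the same file works for any instrumented program.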

Note: This issue is fixed in version 23.12 of CrayPat, so once you switch to CPE 23.12, you won't need this workaround anymore.

- We have seen a few cases where perftools(-lite)-instrumented programs crash with HDF5 errors when using cray-hdf5-parallel, or segfault when performing collective I/O, whereas the uninstrumented versions run without problems. These issues seem to be sampling related, so the easiest workaround is to perform a tracing experiment, for example, using perftools-lite-events instead of perftools-lite.

Known Issues and Current Limitations

- The PGAS programming models (Coarrays and UPC) are not supported on craype-network-ucx.

- The debugging tools, including cray-stat and gdb4hpc, have received a number of important bugfixes and improvements in more recent releases of CPE. We will likely need to upgrade CPE to make these tools work properly.

A Minimal PBS Batch Script

MPI/OpenMP

    #!/usr/bin/env bash
    #PBS -N test
    #PBS -l select=8:ncpus=128:mpiprocs=32:ompthreads=4
    #PBS -l walltime=01:00:00

    shopt -s expand_aliases # or use `mpiexec` instead of `mpirun`
    module load CPE
    cd "$PBS_O_WORKDIR"

    NUM_PROCS="$(wc -l < "$PBS_NODEFILE")"
    mpirun -np "$NUM_PROCS" \
        --cpu-bind depth -d "$OMP_NUM_THREADS" \
        <PROGRAM> <ARGS>
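As a sanity check on the resource request in the script above: with select=8, mpiprocs=32, and ompthreads=4, the job should start 8 × 32 = 256 ranks with 4 threads each, which fills 32 × 4 = 128 cores per node and matches ncpus=128. The sketch below reproduces this arithmetic. The function names are hypothetical, and it assumes that PBS_NODEFILE contains one line per MPI rank, which is what the `wc -l` call in the script relies on.

```shell
# Hypothetical helpers for checking a PBS select statement by hand.

# total_ranks <nodes> <mpiprocs>: number of MPI ranks in the whole job.
total_ranks() {
    echo $(( $1 * $2 ))
}

# cores_per_node <mpiprocs> <ompthreads>: cores occupied on each node.
cores_per_node() {
    echo $(( $1 * $2 ))
}

total_ranks 8 32      # prints 256
cores_per_node 32 4   # prints 128
```

If `cores_per_node` exceeds the ncpus value of the request, the ranks and threads will not each get a core of their own.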