- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CRAY XC40 Using the Batch System

Introduction

The only way to start a parallel job on the compute nodes of this system is to use the batch system. The installed batch system is based on

- the resource management system torque and

- the scheduler moab

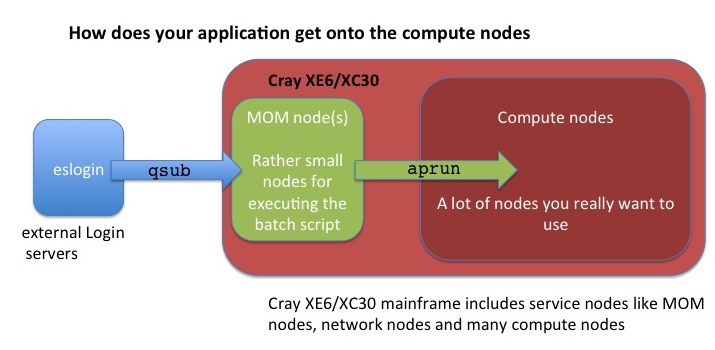

Additionally, on the CRAY XE6/XC30, user applications are always launched on the compute nodes using the application launcher aprun, which submits applications to the Application Level Placement Scheduler (ALPS) for placement and execution.

Detailed information on how to use the CRAY XE6, along with many examples, can be found in the Cray Application Developer's Environment User's Guide and in Workload Management and Application Placement for the Cray Linux Environment.

Detailed information on how to use the CRAY XC30, along with many examples, can be found in the Cray Programming Environment User's Guide and in Workload Management and Application Placement for the Cray Linux Environment.

- ALPS is always used for scheduling a job on the compute nodes. It does not depend on the programming model you use, so a few general definitions are needed:

- PE: Processing Element, basically a Unix 'process'; it can be an MPI task, a CAF image, a UPC thread, ...

- numa_node: the cores and memory on a node with 'flat' memory access, basically one of the 2 dies on the Intel processor and its directly attached memory.

- Thread: a thread is contained inside a process. Multiple threads can exist within the same process and share resources such as memory, while different PEs do not share these resources. Most likely you will use OpenMP threads.

- aprun is the ALPS (Application Level Placement Scheduler) application launcher

- It must be used to run applications on the XC compute nodes, both interactively and in a batch job

- If aprun is not used, the application is launched on the MOM node (and will most likely fail)

- The aprun man page contains several useful examples. There are at least 3 important parameters to control:

- The total number of PEs: -n

- The number of PEs per node: -N

- The number of OpenMP threads per PE: -d (the 'stride' between 2 PEs on a node)

- qsub is the torque submission command for batch job scripts.
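The three aprun parameters listed above interact when MPI and OpenMP are combined. The following is a minimal sketch, assuming 2 allocated nodes with 32 cores each and 4 OpenMP threads per PE; these numbers and the executable name my_hybrid_executable are illustrative assumptions, not site defaults. It only prints the resulting aprun command line so the arithmetic can be checked before using it in a job.

```shell
#!/bin/bash
# Sketch: derive aprun's -n, -N and -d for a hybrid MPI/OpenMP run.
# All values below are illustrative assumptions, not site defaults.
nodes=2              # nodes allocated by the batch system (assumed)
cores_per_node=32    # cores per node (assumed)
threads_per_pe=4     # OpenMP threads per PE (assumed)

# Each PE occupies threads_per_pe cores, so fewer PEs fit on one node.
pes_per_node=$(( cores_per_node / threads_per_pe ))
total_pes=$(( nodes * pes_per_node ))

# OpenMP reads the thread count from this environment variable.
export OMP_NUM_THREADS=$threads_per_pe

# my_hybrid_executable is a placeholder name.
aprun_cmd="aprun -n $total_pes -N $pes_per_node -d $threads_per_pe ./my_hybrid_executable"
echo "$aprun_cmd"
```

With these assumed values, 8 PEs fit on each node and 16 PEs run in total, so -N times -d equals the number of cores per node.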

Writing a submission script is typically the most convenient way to submit your job to the batch system.

You generally interact with the batch system in two ways: through options specified in job submission scripts (these are detailed below in the examples) and by using torque or moab commands on the login nodes. There are three key commands used to interact with torque:

- qsub

- qstat

- qdel

Check the torque man page for more advanced commands and options:

man pbs
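As a rough illustration of how the three commands fit together, the sketch below submits a job, queries its state and then deletes it. The script name my_batchjob_script.pbs is a placeholder, and the guard only exists so the snippet does nothing harmful on a machine without torque.

```shell
#!/bin/bash
# Sketch of the submit / query / delete cycle with the three torque commands.
# my_batchjob_script.pbs is a placeholder job script name.
if command -v qsub >/dev/null 2>&1; then
    torque_available=yes
    job_id=$(qsub my_batchjob_script.pbs)   # qsub prints the job identifier
    qstat "$job_id"                         # show the current state of the job
    qdel "$job_id"                          # remove the job from the queue
else
    torque_available=no
    echo "qsub not found: run these commands on a login node" >&2
fi
```

Capturing the job identifier printed by qsub avoids having to look it up in the qstat listing afterwards.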

Submitting a Job / allocating resources

Batch Mode

Production jobs are typically run in batch mode. Batch scripts are shell scripts containing flags and commands to be interpreted by a shell and are used to run a set of commands in sequence.

- The number of required nodes and cores, the wall time and more are set by parameters in the job script header, on lines starting with "#PBS" that are placed before any executable commands in the script.

      #!/bin/bash
      #PBS -N job_name
      #PBS -l nodes=2:ppn=32
      #PBS -l walltime=00:20:00

      # Change to the directory that the job was submitted from
      cd $PBS_O_WORKDIR

      # Launch the parallel job to the allocated compute nodes
      aprun -n 64 -N 32 ./my_mpi_executable arg1 arg2 > my_output_file 2>&1

- The job is submitted with the qsub command (all #PBS header parameters can also be passed directly as qsub command-line options).

qsub my_batchjob_script.pbs

- Overriding the script's #PBS options on the qsub command line:

qsub -N other_name -l nodes=2:ppn=32,walltime=00:20:00 my_batchjob_script.pbs

- The batch script is not necessarily granted resources immediately; it may sit in the queue of pending jobs for some time before its required resources become available.

- At the end of the execution, the output and error files are returned to the submission directory.

- This example runs your executable "my_mpi_executable" in parallel with 64 MPI processes. Torque allocates 2 nodes to your job for a maximum of 20 minutes and places 32 processes on each node (one per core). The batch system allocates nodes exclusively to one job. Once the walltime limit is exceeded, the batch system terminates your job.

- The job launcher for XE6/XC30 parallel jobs (both MPI and OpenMP) is aprun. It needs to be started from a subdirectory of /mnt/lustre_server (your workspace), and the nodes must already have been allocated by the batch system (qsub). The aprun example above starts the parallel executable "my_mpi_executable" with the arguments "arg1" and "arg2", using 64 MPI processes with 32 processes placed on each of your allocated nodes (remember that a node consists of 32 cores in the XE6 system and only 16 cores in the XC30 system).
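The resource request and the aprun line have to agree: the total number of PEs is the number of nodes times the number of processes per node. A small sketch that derives both from the same variables might look as follows; the variable names are hypothetical, and the commands are only printed, not executed.

```shell
#!/bin/bash
# Sketch: keep the qsub resource request and the aprun line consistent.
# Variable names are illustrative; values mirror the example above.
nodes=2
ppn=32
walltime=00:20:00

# Total PEs = nodes * processes per node.
total_pes=$(( nodes * ppn ))

resource_request="nodes=${nodes}:ppn=${ppn},walltime=${walltime}"
launch_line="aprun -n ${total_pes} -N ${ppn} ./my_mpi_executable arg1 arg2"

echo "qsub -l ${resource_request} my_batchjob_script.pbs"
echo "${launch_line}"
```

Changing only nodes or ppn then updates the request and the launch line together, which avoids asking aprun for more PEs than the allocation provides.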

To query further options of aprun, please use

man aprun

aprun -h

Interactive Mode

Interactive mode is typically used for debugging or optimizing code but not for running production code. To begin an interactive session, use the "qsub -I" command:

qsub -I -l nodes=2:ppn=32,walltime=00:30:00

If the requested resources are available and free (in the example above: 2 nodes with 32 cores each, for 30 minutes), you will get a new session on the MOM node for your requested resources. You then have to use the aprun command to launch your application on the allocated compute nodes. When you are finished, enter logout to exit the batch system and return to the normal command line.
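Inside the interactive session, the launch step could look like the sketch below. It assumes the 2 x 32 request from the example above and a placeholder executable my_mpi_executable; the guard merely keeps the snippet harmless on a machine without aprun.

```shell
#!/bin/bash
# Commands to run inside the interactive session started by "qsub -I".
# my_mpi_executable is a placeholder; -n and -N match a 2 x 32 request.
if command -v aprun >/dev/null 2>&1; then
    inside_cray_session=yes
    # PBS_O_WORKDIR is the directory from which qsub was called.
    cd "${PBS_O_WORKDIR:-$PWD}" || exit 1
    aprun -n 64 -N 32 ./my_mpi_executable
else
    inside_cray_session=no
    echo "aprun not found: not on a Cray MOM node" >&2
fi
```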