- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CRAY XE6 Graphic Environment

No edit summary |

|||

| (32 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== CRAY XE6 Graphic | |||

For graphical pre- and post-processing purposes 3 visualisation nodes have been integrated into the external nodes of the CRAY XE6. The nodes are equipped with a nVIDIA Quadro 6000 with 6GB memory, 32 cores CPU and 128 GB of main memory. Access to a single node is possible by using the node feature ''mem128gb''. | |||

<pre> | |||

user@eslogin001>qsub -I -lnodes=1:mem128gb | |||

</pre> | |||

To use the graphic hardware for remote rendering there are currently two ways tested. | |||

The first is via TurboVNC the second one is directly via VirtualGL. | |||

== VNC Setup == | |||

If you want to use the CRAY XE6 Graphic Hardware e.g. for graphical pre- and post-processing, on way to do it is via a vnc-connection. For that purpose you have to download/install a VNC viewer to/on your local machine. | |||

E.g. TurboVNC comes as a pre-compiled package which can be downloaded from [http://www.virtualgl.org/Downloads/TurboVNC http://www.virtualgl.org/Downloads/TurboVNC]. For Fedora 13 the package tigervnc is available via yum installer. | |||

Other VNC viewers can also be used but we recommend the usage of TurboVNC, an accelerated version of TightVNC | |||

designed for video and 3D applications, since we run the TurboVNC server on the visualisation nodes and the combination of the two components will give the best performance for GL applications. | |||

==== Preparation of CRAY XE6 ==== | |||

Before using vnc for the first time you have to log on to one of the hermit front ends (hermit1.hww.de) and load the module "VirtualGL" | |||

<pre> module load tools/VirtualGL </pre> | |||

Then, you have to make a .vnc directory in your home directory and run | |||

<pre>vncpasswd</pre> | |||

which creates a passwd file in the .vnc directory. | |||

==== Starting the VNC server ==== | |||

To start a VNC session you simply have to login to one of the front ends (hermit1.hww.de) via ssh. | |||

<pre> | |||

user@client>ssh hermit1.hww.de | |||

</pre> | |||

Then load the VirtualGL module to setup the VNC environment and launch the VNC startscript. | |||

<pre> | |||

user@eslogin001>module load tools/VirtualGL | |||

user@eslogin001>vis_via_vnc.sh | |||

</pre> | |||

This will set up a default VNC session with one hour walltime running a TurboVNC server with a resolution of 1240x900. | |||

To control the configuration of the VNC session the vis_via_vnc.sh script has three '''optional''' parameters. | |||

<pre> | |||

vis_via_vnc.sh [walltime] [geometry] [x0vnc] | |||

</pre> | |||

where walltime can be specified in format hh:mm:ss or only hh. | |||

The geometry option sets the resolution of the Xvnc server launched by the TurboVNC session and has to be given in format 1234x1234. | |||

If x0vnc is specified instead of a TurboVNC server with own Xvnc Display a X0-VNC server is started. As its name says this server is directly connected to the :0.0 Display which is contoled by an X-Server always running on the visualisation nodes. A session started with this option will have a maximum resolution of 1152x864 pixels which can not be changed. | |||

There is also a -help option which returns the explanation of the parameters and gives examples for script usage. | |||

The vis_via_vnc.sh script returns the name of the node reserved for you, a display number and the IP-address. | |||

==== VNC viewer with -via option ==== | |||

If you got a VNC viewer which supports the via option like TurboVNC or TigerVNC you can simply call the viewer | |||

like stated below | |||

<pre> | |||

user@client>vncviewer -via hermit1.hww.de <node name>:<Display#> | |||

</pre> | |||

==== VNC viewer without -via option ==== | |||

If you got a VNC viewer without support of the vis option, like JavaVNC or TightVNC for Windows, you have to setup a ssh tunnel via the frontend first and then launch the VNC viewer with a connection to localhost | |||

<pre> | |||

user@client>ssh -N -L10000:<IP-address of vis-node>:5901 & | |||

user@client>vncviewer localhost:10000 | |||

</pre> | |||

Enter the vnc password you set on the frontend and you should get a Gnome session running in your VNC viewer. | |||

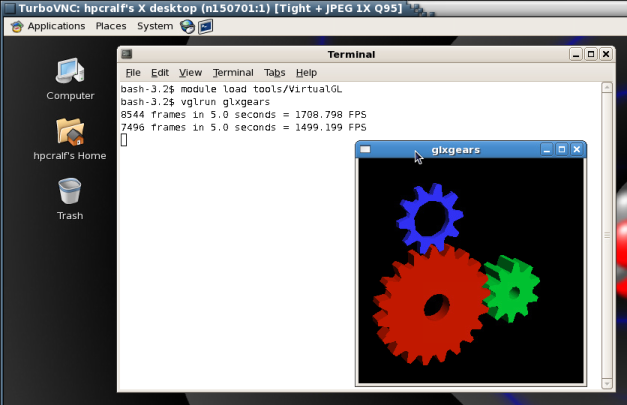

==== GL applications within the VNC session with TurboVNC ==== | |||

To execute 64Bit GL Applications within this session you have to open a shell and again load the VirtualGL module to set the VirtualGL environment and then start the application with the VirtualGL wrapper command | |||

<pre> | |||

vglrun <Application command> | |||

</pre> | |||

[[Image:vgl_in_vnc_session.png]] | |||

==== GL applications within the VNC session with X0-VNC ==== | |||

Since the X0-VNC server is directly reading the frame buffer of the visualisation node's graphic card, GL applications can be directly executed within the VNC session without using any wrapping command. | |||

==== Ending the vnc session ==== | |||

If the vnc session isn't needed any more and the requested walltime isn't expired already you should kill your queue job with | |||

<pre> | |||

user@eslogin001>qdel <jour_job_id> | |||

</pre> | |||

== VirtualGL Setup (WITHOUT turbovnc) == | |||

To use VirtualGL you have to install it on your local client. It is available in the form of pre-compiled packages at [http://www.virtualgl.org/Downloads/VirtualGL http://www.virtualgl.org/Downloads/VirtualGL]. | |||

==== Linux ==== | |||

After the installation of VirtualGL you can connect to cl3fr1.hww.de via the vglconnect command | |||

<pre> | |||

user@client>vglconnect -s hermit1.hww.de | |||

</pre> | |||

Then call the prepare the visualisation queue job and connect to the reserved visualisation node via the vglconnect command | |||

<pre> | |||

user@eslogin001>module load tools/VirtualGL | |||

user@eslogin001>vis_via_vgl.sh XX:XX:XX | |||

user@eslogin001>vglconnect -s vis-node | |||

</pre> | |||

where XX:XX:XX is the walltime required for your visualisation job and vis-node the hostname of the visualisation node reserved for you. | |||

On the visualisation node you can then execute 64Bit GL applications with the VirtualGL wrapper command | |||

<pre> | |||

user@vis-node>module load tools/VirtualGL | |||

user@vis-node>vglrun <Application command> | |||

</pre> | |||

= Visualisation Software = | |||

To use the installed visualisation software please follow the steps described below. | |||

== Covise == | |||

== ParaView == | |||

The visualisation software [http://www.paraview.org ParaView] can currently be used in three different ways: | |||

* Serial execution on a visualisation node | |||

This installation is done from the generic binary distribution provided under [http://www.paraview.org/paraview/resources/software.php http://www.paraview.org/paraview/resources/software.php] | |||

* Parallel execution on a visualisation node | |||

This installations are build from source for the visualisation nodes along with the development fatures. This means user developed plugins can be build for this installations. | |||

The ParaView_DIR which has to be given to cmake has to point to /opt/hlrs/tools/paraview/x.x.x-parallel/lib/cmake/paraview-4.0. Where again x.x.x is the version number of Paraview you wish to build for. | |||

* Parallel execution on the compute nodes | |||

This installations are build from source for the CRAY compute nodes. As there is no graphic hardware available on the compute nodes ParaView is build with software rendering. For that the so called | |||

Mesa 9.2.2 OSMesa Gallium llvmpipe state-tracker software rasterizer is used since according to [http://paraview.org/Wiki/ParaView/ParaView_And_Mesa_3D] it is the preferred Mesa back-end renderer for ParaView and VTK. | |||

To execute ParaView either in serial or parallel on a visualisation node first of all reserve one with one of the procedures explained above. | |||

=== Serial execution on a visualisation node === | |||

Being connected to a visualisation node load the ParaView module and execute the ParaView Client with the vglrun wrapper command | |||

<pre> | |||

user@vis-node>module load tools/paraview/x.x.x | |||

user@vis-node>vglrun paraview | |||

</pre> | |||

where x.x.x is the version number of Paraview you wish to load. The module tools/VirtualGL is loaded along with the paraview module. | |||

Which versions are currently installed can be looked up by executing the command | |||

<pre> | |||

user@vis-node>module avail tools/paraview | |||

</pre> | |||

=== Parallel execution on visualisation nodes pre001 and pre002 === | |||

For the visualisation of bigger data sets a parallel installation of ParaView with OpenGL support is available. Since ParaView itself is a completely serial application, when visualizing data in parallel Paraview has to be used in Server-Client configuration. | |||

Being connected to a visualisation node load the Paraview module | |||

<pre> | |||

user@vis-node>module load tools/paraview/x.x.x-parallel-OpenGL | |||

</pre> | |||

where x.x.x is the version number of Paraview you wish to load. | |||

In current configuration the module switches to ProgEnv-gnu and loads the correct compiler version of gcc. Also the PATH, LIBRARY_PATH and LD_LIBRARY_PATH environment variables are extended to point to the lib and bin paths of the Paraview installation. In addition the VirtualGL module is loaded. | |||

Along with ParaView a version of OpenMPI is installed in the same directory. This is because the CRAY mpi installation is not usable on the vis-nodes as they are bootet with an opensuse installation and are not connected to the Gemini network of the Hermit mainframe. | |||

To run parallel ParaView a parallel pvserver is needed which can be launched with the command | |||

<pre> | |||

mpirun -n xx -mca btl sm,self vglrun pvserver | |||

</pre> | |||

where xx is the number of prcesses to use for the pvserver. Please notice that the server has still to be launched with the vglrun wrapper command. | |||

=== Parallel execution on visualisation nodes pre003 === | |||

On the visualisation node pre003 there is the posebility to launch a X-server driving two graphic cards. For that option please get in contact with the system administrators. | |||

The execution command for the pvserver then has to be: | |||

<pre> | |||

mpirun -n xx -mca btl sm,self vglrun -d :0.0 pvserver : -n xx -mca btl sm,self vglrun -d :0.1 pvserver | |||

</pre> | |||

where xx is the number of prcesses to use for the pvserver. Please notice that the server has still to be launched with the vglrun wrapper command using the option -d which tells VirtualGL on which screen of display :0 to render. | |||

Additionally pre003 can be used with only one graphic card in the same way as described above. | |||

=== Parallel execution on compute nodes === | |||

For the visualisation of large scale data sets a parallel installation of ParaView with the OSMesa software rasterizer as the back-end renderer is available. This installation is '''not''' meant to be executed on the visualisation nodes since it is build against the CRAY-MPI libraries and is not able to perform hardware OpenGL rendering on graphic cards. The rendering is done directly on the CPU into a memory buffer. | |||

To launch a pvserver with OSMesa support first of all allocate an interactive batch job as described [[CRAY_XE6_Using_the_Batch_System#Interactive_Mode | here]]. Please be aware that the main problem with visualizing large scale data sets is the host memory. So be shure to allocate enough compute nodes so that your problem will fit in the accumulated memory. If the paraview server exits with an error message like the one below it is an indication that one of the compute nodes has ran out of memory and that you should use more or bigger compute nodes to fit your problem into the memory. | |||

<pre> | |||

[NID 03629] 2013-12-12 14:43:30 Apid 3551455: initiated application termination | |||

[NID 03628] 2013-12-12 14:43:32 Apid 3551455: OOM killer terminated this process. | |||

[NID 03629] 2013-12-12 14:43:32 Apid 3551455: OOM killer terminated this process. | |||

Application 3551455 exit signals: Killed | |||

Application 3551455 resources: utime ~32s, stime ~77s | |||

</pre> | |||

Once logged in to the interactive batch job find out the ip-address of your mom-node with | |||

<pre> | |||

hostname -i | |||

</pre> | |||

Remember this address you will need it later on. After that load the ParaView Mesa module with | |||

<pre> | |||

module load tools/paraview/x.x.x-parallel-Mesa | |||

</pre> | |||

where x.x.x is the version of ParaView you wish to load. Then launch the pvserver with | |||

<pre> | |||

aprun -n xxx pvserver | |||

</pre> | |||

where xxx is the numer of cores you allocated in your interactive batch job with -lmppwidth=xxx. | |||

If the pvserver is up and running it should respond with a message like the one below. | |||

<pre> | |||

04:01 PM nid03524 ~>aprun -n 128 pvserver | |||

Waiting for client... | |||

Connection URL: cs://nid00961:11111 | |||

Accepting connection(s): nid00961:11111 | |||

</pre> | |||

When the pvserver is running establish a second connection to hermit1.hww.de with X-forwarding activ (ssh option -X) and then connect to the mom-node by means of the above rembered ip-address | |||

<pre> | |||

ssh -X xxx.xxx.xxx.xxx | |||

</pre> | |||

Again load the ParaView module with | |||

<pre> | |||

module load tools/paraview/x.x.x-parallel-Mesa | |||

</pre> | |||

where x.x.x is the version of ParaView you wish to load. Be aware that this version has to be the same as the one of the pvserver you launched since it sets the LD_LIBRARY_PATH environment variable in a way that it fits to the client made for the server version. | |||

In ParaView use the File->Connect...->Add Server command to configure a pvserver. | |||

The parameters from the example above would be: | |||

*Name: My_Server | |||

*Server Type: Client/Server | |||

*Host: nid00961 | |||

*Port: 11111 | |||

Apply your settings by pressing Configure. In the subsequent dialog choose start manual and again apply your settings by pressing Save. Then choose your newly configured server from the list and connect to it by pressing the connect button. | |||

'''Remark:''' In principle it is possible to use any client which has the same version as the server for te connection. In case that user developed plugins should be loaded on client as well as sever side the client has to be especially build for the server since the library environment has to be the same for both parts. | |||

=== Parallel execution on compute nodes with VNC === | |||

If you experience performace issues with the X-forwarding when executing the paraview client on a mom-node it is also possible to connect from a vnc session on a pre node to a pvserver running on the compute nodes. | |||

First start a vnc-session on a visualisation node as decribed above. Within this vnc-session load the ParaView mesa module and execute the launch_pvserver.sh script. | |||

<pre> | |||

module load tools/paraview/x.x.x-parallel-Mesa | |||

launch_pvserver.sh XX:XX:XX mppnppn=nn mppwidth=mm | |||

</pre> | |||

where XX:XX:XX is the walltime in hours:minutes:seconds, nn is the number of processes to start per node and mm is the number of processes to start (like in the options of the qsub command). | |||

After successful startup of the pvserver the script issues a ssh tunnel command. Executing this command connects the local port 11112 to the one of the pvserver running on the compute nodes. | |||

After that you should be able to connect with the paraview client to localhost port 11112. | |||

The parameters in the connect dialog would be: | |||

*Name: My_Server | |||

*Server Type: Client/Server | |||

*Host: localhost | |||

*Port: 11112 | |||

Please note that there is no direct rendering possible on an Xvnc server so you have to execute the paraview client with the vglrun wrapper command. | |||

If the execution of the tunnel command fails due to an occupied local port and since there is no free port detection implemented in the script you should choose another port nummber to forward instead of 11112. | |||

Latest revision as of 13:57, 29 January 2014

For graphical pre- and post-processing, three visualisation nodes have been integrated into the external nodes of the CRAY XE6. The nodes are equipped with an NVIDIA Quadro 6000 with 6 GB of memory, 32 CPU cores and 128 GB of main memory. A single node can be accessed by requesting the node feature mem128gb.

user@eslogin001>qsub -I -lnodes=1:mem128gb

Two ways of using the graphics hardware for remote rendering have been tested so far: the first is via TurboVNC, the second directly via VirtualGL.

VNC Setup

If you want to use the CRAY XE6 graphics hardware, e.g. for graphical pre- and post-processing, one way to do so is via a VNC connection. For that purpose you have to download and install a VNC viewer on your local machine.

TurboVNC, for example, comes as a pre-compiled package which can be downloaded from http://www.virtualgl.org/Downloads/TurboVNC. For Fedora 13 the package tigervnc is available via the yum installer.

Other VNC viewers can also be used but we recommend the usage of TurboVNC, an accelerated version of TightVNC designed for video and 3D applications, since we run the TurboVNC server on the visualisation nodes and the combination of the two components will give the best performance for GL applications.

Preparation of CRAY XE6

Before using VNC for the first time you have to log on to one of the Hermit front ends (hermit1.hww.de) and load the module "VirtualGL":

module load tools/VirtualGL

Then, you have to make a .vnc directory in your home directory and run

vncpasswd

which creates a passwd file in the .vnc directory.

Starting the VNC server

To start a VNC session, simply log in to one of the front ends (hermit1.hww.de) via ssh.

user@client>ssh hermit1.hww.de

Then load the VirtualGL module to set up the VNC environment and launch the VNC start script.

user@eslogin001>module load tools/VirtualGL
user@eslogin001>vis_via_vnc.sh

This will set up a default VNC session with one hour walltime running a TurboVNC server with a resolution of 1240x900. To control the configuration of the VNC session the vis_via_vnc.sh script has three optional parameters.

vis_via_vnc.sh [walltime] [geometry] [x0vnc]

where walltime can be specified in format hh:mm:ss or only hh.

The geometry option sets the resolution of the Xvnc server launched by the TurboVNC session and has to be given in format 1234x1234.

If x0vnc is specified, an X0-VNC server is started instead of a TurboVNC server with its own Xvnc display. As its name suggests, this server is directly connected to the :0.0 display, which is controlled by an X server that is always running on the visualisation nodes. A session started with this option has a maximum resolution of 1152x864 pixels, which cannot be changed.

There is also a -help option which returns the explanation of the parameters and gives examples for script usage.

The vis_via_vnc.sh script returns the name of the node reserved for you, a display number and the IP-address.
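The argument formats described above can be checked before the script is called. The following is only a minimal sketch: the helper names valid_walltime, valid_geometry and vnc_command are hypothetical and not part of the HLRS installation, and accepting a one-digit hour value is an assumption.

```shell
#!/bin/sh
# Hypothetical helpers (not part of the HLRS tools): check the arguments
# before handing them to vis_via_vnc.sh.

# Succeeds if $1 looks like hh:mm:ss or like a plain hour count.
# Assumption: a plain hour count may have one or two digits.
valid_walltime() {
    case "$1" in
        [0-9][0-9]:[0-9][0-9]:[0-9][0-9]) return 0 ;;
        [0-9]|[0-9][0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

# Succeeds if $1 looks like <width>x<height>, e.g. 1280x1024.
valid_geometry() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+x[0-9]+$'
}

# Prints the command line that would be run, or fails with a message.
vnc_command() {
    walltime=$1
    geometry=$2
    valid_walltime "$walltime" || { echo "bad walltime: $walltime" >&2; return 1; }
    valid_geometry "$geometry" || { echo "bad geometry: $geometry" >&2; return 1; }
    echo "vis_via_vnc.sh $walltime $geometry"
}

vnc_command 02:00:00 1280x1024
```

The sketch only prints the command line, so it can be tried on any machine; on a front end the printed line would be executed after loading tools/VirtualGL.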

VNC viewer with -via option

If you have a VNC viewer which supports the -via option, like TurboVNC or TigerVNC, you can simply call the viewer as shown below:

user@client>vncviewer -via hermit1.hww.de <node name>:<Display#>

VNC viewer without -via option

If you have a VNC viewer without support for the -via option, like JavaVNC or TightVNC for Windows, you first have to set up an SSH tunnel via the front end and then launch the VNC viewer with a connection to localhost. The remote port is 5900 plus the display number returned by vis_via_vnc.sh (5901 for display :1).

user@client>ssh -N -L10000:<IP-address of vis-node>:5901 hermit1.hww.de &
user@client>vncviewer localhost:10000

Enter the vnc password you set on the frontend and you should get a Gnome session running in your VNC viewer.
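The tunnel command can be derived from the values returned by vis_via_vnc.sh. The sketch below relies on the VNC convention that display :N listens on TCP port 5900 + N; the function names vnc_port and tunnel_command are hypothetical, and 192.0.2.10 is a documentation placeholder address, not a real visualisation node.

```shell
#!/bin/sh
# Sketch: derive the TCP port of a VNC display and print the tunnel command.
# By VNC convention, display :N listens on TCP port 5900 + N.

vnc_port() {
    echo $((5900 + $1))
}

# Arguments: <vis-node IP> <display number> [local port, default 10000]
tunnel_command() {
    node_ip=$1
    display=$2
    local_port=${3:-10000}
    echo "ssh -N -L ${local_port}:${node_ip}:$(vnc_port "$display") hermit1.hww.de"
}

# 192.0.2.10 is a placeholder address used only for illustration.
tunnel_command 192.0.2.10 1
```

With display number 1 this prints a tunnel to remote port 5901, which matches the example above.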

GL applications within the VNC session with TurboVNC

To execute 64-bit GL applications within this session, open a shell, load the VirtualGL module again to set up the VirtualGL environment, and then start the application with the VirtualGL wrapper command

vglrun <Application command>

GL applications within the VNC session with X0-VNC

Since the X0-VNC server is directly reading the frame buffer of the visualisation node's graphic card, GL applications can be directly executed within the VNC session without using any wrapping command.

Ending the vnc session

If the VNC session is no longer needed and the requested walltime has not yet expired, you should delete your queue job with

user@eslogin001>qdel <your_job_id>

VirtualGL Setup (WITHOUT turbovnc)

To use VirtualGL you have to install it on your local client. It is available in the form of pre-compiled packages at http://www.virtualgl.org/Downloads/VirtualGL.

Linux

After installing VirtualGL you can connect to hermit1.hww.de via the vglconnect command

user@client>vglconnect -s hermit1.hww.de

Then prepare the visualisation queue job and connect to the reserved visualisation node via the vglconnect command

user@eslogin001>module load tools/VirtualGL
user@eslogin001>vis_via_vgl.sh XX:XX:XX
user@eslogin001>vglconnect -s vis-node

where XX:XX:XX is the walltime required for your visualisation job and vis-node the hostname of the visualisation node reserved for you.

On the visualisation node you can then execute 64-bit GL applications with the VirtualGL wrapper command

user@vis-node>module load tools/VirtualGL
user@vis-node>vglrun <Application command>

Visualisation Software

To use the installed visualisation software please follow the steps described below.

Covise

ParaView

The visualisation software ParaView can currently be used in three different ways:

- Serial execution on a visualisation node

This installation uses the generic binary distribution provided at http://www.paraview.org/paraview/resources/software.php

- Parallel execution on a visualisation node

These installations are built from source for the visualisation nodes, including the development features. This means that user-developed plugins can be built against these installations. The ParaView_DIR variable passed to cmake has to point to /opt/hlrs/tools/paraview/x.x.x-parallel/lib/cmake/paraview-4.0, where x.x.x is the version number of ParaView you wish to build for.

- Parallel execution on the compute nodes

These installations are built from source for the CRAY compute nodes. As there is no graphics hardware available on the compute nodes, ParaView is built with software rendering. For this, the Mesa 9.2.2 OSMesa Gallium llvmpipe state-tracker software rasterizer is used, since according to http://paraview.org/Wiki/ParaView/ParaView_And_Mesa_3D it is the preferred Mesa back-end renderer for ParaView and VTK.

To execute ParaView either in serial or in parallel on a visualisation node, first reserve one using one of the procedures explained above.
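For plugin builds against the parallel installation on the visualisation nodes, the ParaView_DIR setting could be passed to cmake roughly as follows. This is only a sketch: the helper name paraview_dir, the version number 4.0.1 and the plugin source path are hypothetical placeholders, and the command is printed rather than executed.

```shell
#!/bin/sh
# Sketch: build the ParaView_DIR path for a given ParaView version.
# The path layout is the one stated in the text above.
paraview_dir() {
    echo "/opt/hlrs/tools/paraview/$1-parallel/lib/cmake/paraview-4.0"
}

# 4.0.1 and the source directory are placeholders for illustration only.
echo "cmake -DParaView_DIR=$(paraview_dir 4.0.1) /path/to/plugin/source"
```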

Serial execution on a visualisation node

Once connected to a visualisation node, load the ParaView module and execute the ParaView client with the vglrun wrapper command

user@vis-node>module load tools/paraview/x.x.x
user@vis-node>vglrun paraview

where x.x.x is the version number of ParaView you wish to load. The module tools/VirtualGL is loaded along with the ParaView module.

The currently installed versions can be listed with the command

user@vis-node>module avail tools/paraview

Parallel execution on visualisation nodes pre001 and pre002

For the visualisation of larger data sets a parallel installation of ParaView with OpenGL support is available. Since the ParaView client itself is a completely serial application, ParaView has to be used in a client-server configuration when visualising data in parallel.

Once connected to a visualisation node, load the ParaView module

user@vis-node>module load tools/paraview/x.x.x-parallel-OpenGL

where x.x.x is the version number of ParaView you wish to load.

In the current configuration the module switches to ProgEnv-gnu and loads the correct compiler version of gcc. The PATH, LIBRARY_PATH and LD_LIBRARY_PATH environment variables are also extended to point to the lib and bin paths of the ParaView installation. In addition, the VirtualGL module is loaded.

Along with ParaView, a version of OpenMPI is installed in the same directory. This is because the CRAY MPI installation is not usable on the visualisation nodes, as they are booted with an openSUSE installation and are not connected to the Gemini network of the Hermit mainframe.

To run ParaView in parallel, a parallel pvserver is needed, which can be launched with the command

mpirun -n xx -mca btl sm,self vglrun pvserver

where xx is the number of processes to use for the pvserver. Please note that the server still has to be launched with the vglrun wrapper command.

Parallel execution on visualisation node pre003

On the visualisation node pre003 it is possible to launch an X server driving two graphics cards. For that option please contact the system administrators. The execution command for the pvserver then has to be:

mpirun -n xx -mca btl sm,self vglrun -d :0.0 pvserver : -n xx -mca btl sm,self vglrun -d :0.1 pvserver

where xx is the number of processes to use for the pvserver. Please note that the server still has to be launched with the vglrun wrapper command, here with the option -d, which tells VirtualGL on which screen of display :0 to render.

Additionally pre003 can be used with only one graphic card in the same way as described above.
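The two-card command line can be assembled from a total process count. The sketch below assumes that the processes are split evenly between the screens :0.0 and :0.1; the function name dual_gpu_pvserver_command is hypothetical, and the command is only printed, not executed.

```shell
#!/bin/sh
# Sketch: print the mpirun command for two graphics cards on pre003.
# Assumption: the total process count is split evenly over both screens.
# Argument: <total number of pvserver processes>
dual_gpu_pvserver_command() {
    total=$1
    if [ $((total % 2)) -ne 0 ]; then
        echo "process count must be even: $total" >&2
        return 1
    fi
    per_card=$((total / 2))
    echo "mpirun -n $per_card -mca btl sm,self vglrun -d :0.0 pvserver : -n $per_card -mca btl sm,self vglrun -d :0.1 pvserver"
}

dual_gpu_pvserver_command 16
```

For 16 processes this prints a command with eight processes rendering on each screen.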

Parallel execution on compute nodes

For the visualisation of large-scale data sets a parallel installation of ParaView with the OSMesa software rasterizer as the back-end renderer is available. This installation is not meant to be executed on the visualisation nodes, since it is built against the CRAY MPI libraries and cannot perform hardware OpenGL rendering on graphics cards. The rendering is done directly on the CPU into a memory buffer.

To launch a pvserver with OSMesa support, first allocate an interactive batch job as described in CRAY XE6 Using the Batch System (Interactive Mode). Please be aware that the main limitation when visualising large-scale data sets is the host memory, so be sure to allocate enough compute nodes for your problem to fit into their combined memory. If the ParaView server exits with an error message like the one below, one of the compute nodes has run out of memory and you should use more compute nodes, or nodes with more memory, to fit your problem into memory.

[NID 03629] 2013-12-12 14:43:30 Apid 3551455: initiated application termination
[NID 03628] 2013-12-12 14:43:32 Apid 3551455: OOM killer terminated this process.
[NID 03629] 2013-12-12 14:43:32 Apid 3551455: OOM killer terminated this process.
Application 3551455 exit signals: Killed
Application 3551455 resources: utime ~32s, stime ~77s
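A rough node count can be estimated before submitting the job. The sketch below is plain ceiling arithmetic; the function names are hypothetical, and the numbers used (100 GB of data in memory, 24 GB usable per node, 32 cores per node) are illustrative assumptions, not HLRS figures. Note that a data set usually needs more memory once loaded than it occupies on disk.

```shell
#!/bin/sh
# Sketch: estimate how many compute nodes a data set needs.

# nodes_needed <data size in GB> <usable memory per node in GB>
# Rounds up, so a partially filled node still counts as a whole node.
nodes_needed() {
    data_gb=$1
    mem_gb=$2
    echo $(( (data_gb + mem_gb - 1) / mem_gb ))
}

# mppwidth_for <nodes> <cores per node>: core count when using full nodes.
mppwidth_for() {
    echo $(( $1 * $2 ))
}

# Illustrative values only: 100 GB of data, 24 GB usable per node, 32 cores.
nodes=$(nodes_needed 100 24)
echo "nodes: $nodes, mppwidth: $(mppwidth_for "$nodes" 32)"
```

With these example values the script prints "nodes: 5, mppwidth: 160".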

Once logged in to the interactive batch job, find out the IP address of your MOM node with

hostname -i

Note this address; you will need it later on. After that, load the ParaView Mesa module with

module load tools/paraview/x.x.x-parallel-Mesa

where x.x.x is the version of ParaView you wish to load. Then launch the pvserver with

aprun -n xxx pvserver

where xxx is the number of cores you allocated in your interactive batch job with -lmppwidth=xxx.

If the pvserver is up and running it should respond with a message like the one below.

04:01 PM nid03524 ~>aprun -n 128 pvserver
Waiting for client...
Connection URL: cs://nid00961:11111
Accepting connection(s): nid00961:11111

When the pvserver is running, establish a second connection to hermit1.hww.de with X forwarding active (ssh option -X) and then connect to the MOM node using the IP address noted above
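The host and port needed for the client connection can be read from the Connection URL line of this output. The sketch below assumes the output format shown in the example above; the function name parse_connection_url is hypothetical.

```shell
#!/bin/sh
# Sketch: extract "<host> <port>" from pvserver output read on stdin.
# Assumes a line of the form "Connection URL: cs://<host>:<port>".
parse_connection_url() {
    sed -n 's|^Connection URL: cs://\([^:]*\):\([0-9]*\)[[:space:]]*$|\1 \2|p'
}

# Feed the example output from above through the parser.
printf '%s\n' \
    'Waiting for client...' \
    'Connection URL: cs://nid00961:11111' \
    'Accepting connection(s): nid00961:11111' | parse_connection_url
```

For the example output this prints "nid00961 11111", the values to enter as Host and Port in the ParaView connect dialog.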

ssh -X xxx.xxx.xxx.xxx

Again load the ParaView module with

module load tools/paraview/x.x.x-parallel-Mesa

where x.x.x is the version of ParaView you wish to load. Be aware that this version has to be the same as that of the pvserver you launched, since the module sets the LD_LIBRARY_PATH environment variable to match the client built for that server version.

In ParaView use the File->Connect...->Add Server command to configure a pvserver.

The parameters from the example above would be:

- Name: My_Server

- Server Type: Client/Server

- Host: nid00961

- Port: 11111

Apply your settings by pressing Configure. In the subsequent dialog choose the startup type Manual and again apply your settings by pressing Save. Then choose your newly configured server from the list and connect to it by pressing the Connect button.

Remark: In principle it is possible to use any client which has the same version as the server for the connection. If user-developed plugins are to be loaded on both the client and the server side, the client has to be built specifically for the server, since the library environment has to be the same for both parts.

Parallel execution on compute nodes with VNC

If you experience performance issues with X forwarding when executing the ParaView client on a MOM node, it is also possible to connect from a VNC session on a pre node to a pvserver running on the compute nodes.

First start a VNC session on a visualisation node as described above. Within this VNC session load the ParaView Mesa module and execute the launch_pvserver.sh script.

module load tools/paraview/x.x.x-parallel-Mesa
launch_pvserver.sh XX:XX:XX mppnppn=nn mppwidth=mm

where XX:XX:XX is the walltime in hours:minutes:seconds, nn is the number of processes to start per node and mm is the number of processes to start (like in the options of the qsub command).

After successful startup of the pvserver the script outputs an SSH tunnel command. Executing this command connects the local port 11112 to the port of the pvserver running on the compute nodes. After that you should be able to connect with the ParaView client to localhost port 11112.

The parameters in the connect dialog would be:

- Name: My_Server

- Server Type: Client/Server

- Host: localhost

- Port: 11112

Please note that direct rendering is not possible on an Xvnc server, so you have to execute the ParaView client with the vglrun wrapper command.

The script does not implement free-port detection. If the execution of the tunnel command fails because the local port is already occupied, choose another local port number to forward instead of 11112.
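Choosing an alternative port can be sketched as a simple search. The function next_free_port below is hypothetical and not part of launch_pvserver.sh; it assumes that the list of occupied local ports has been collected beforehand, for example from the output of a tool such as netstat.

```shell
#!/bin/sh
# Sketch: pick the first port at or above a start value that is not in a
# given list of occupied ports.
# Arguments: <start port> <space-separated list of occupied ports>
next_free_port() {
    port=$1
    busy=" $2 "
    while [ "$port" -le 65535 ]; do
        case "$busy" in
            *" $port "*) port=$((port + 1)) ;;   # occupied, try the next one
            *) echo "$port"; return 0 ;;
        esac
    done
    return 1   # no free port left in the valid range
}

# Example: 11112 and 11113 are assumed to be occupied.
next_free_port 11112 "22 11112 11113"
```

In this example the function prints 11114; that port would then replace 11112 both in the tunnel command and in the Port field of the connect dialog.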