- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

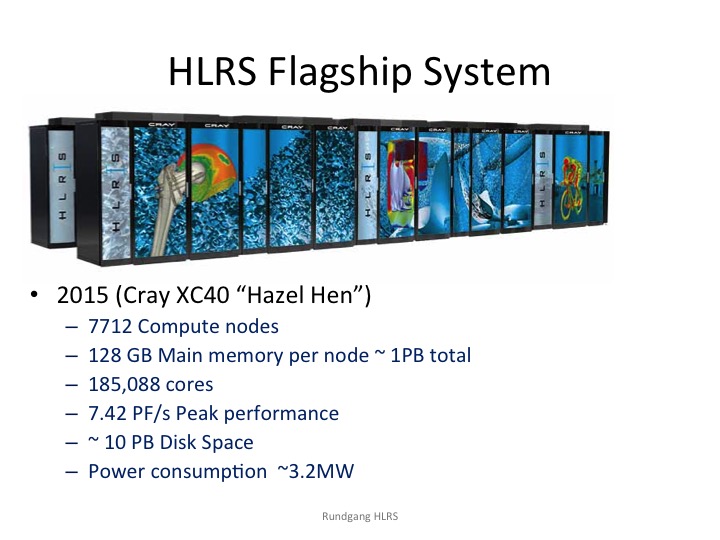

CRAY XC40 Hardware and Architecture

Hazelhen production system

Summary of the Hazelhen production system

| Cray Cascade XC40 Supercomputer | Step 2 |

|---|---|

| Performance | 7.4 Pflops |

| Cray Cascade Cabinets | 41 |

| Number of Compute Nodes | 7712 (dual socket) |

Compute Processors

|

7712*2= 15424 Intel Haswell E5-2680v3 2,5 GHz, 12 Cores, 2 HT/Core |

Compute Memory on Scalar Processors

|

DDR4-2133 registered ECC |

| Interconnect | Cray Aries |

| Service Nodes (I/O and Network) | 90 |

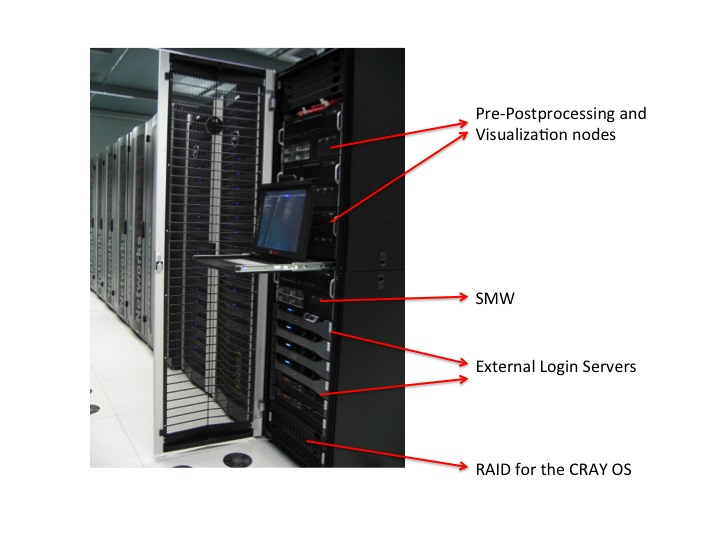

| External Login Servers | 10 |

| Pre- and Post-Processing Servers | 3 Cray CS300: each with 4x Intel(R) Xeon(R) CPU E5-4620 v2 @ 2.60GHz (Ivy Bridge), 32 cores, 512 GB DDR3 Memory (PC3-14900R), 7,1TB scratch disk space (4x ~2TB RAID0), NVidia Quadro K6000 (12 GB GDDR5), single job usage

|

User Storage

|

~10 PB |

Cray Linux Environment (CLE)

|

Yes |

| PGI Compiling Suite (FORTRAN, C, C++) including Accelerator | 25 user (shared with Step 1) |

Cray Developer Toolkit

|

Unlimited Users |

Cray Programming Environment

|

Unlimited Users |

| Alinea DDT Debugger | 2048 Processes (shared with Step 1) |

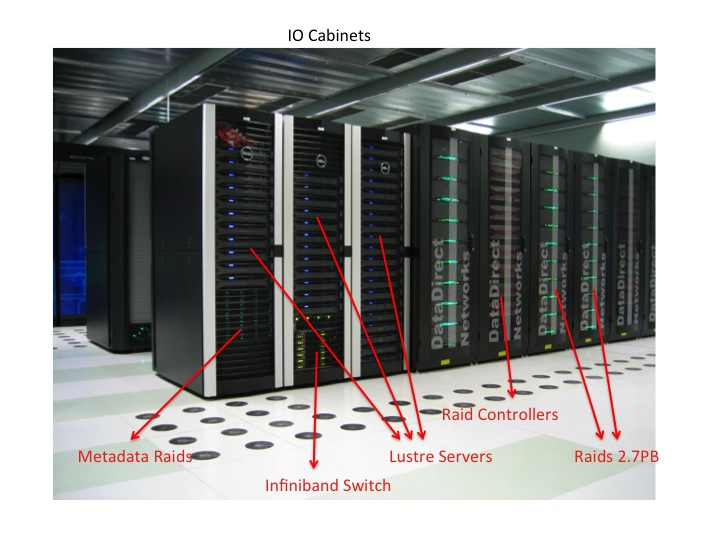

| Lustre Parallel Filesystem | Licensed on all Sockets |

Intel Composer XE

|

10 Seats |
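The 7.4 Pflops figure in the table can be reproduced from the processor data. The sketch below assumes (this is not stated in the table) that each Haswell core can retire 16 double-precision floating-point operations per cycle (two 256-bit FMA units, 4 doubles each, 2 operations per FMA) at the nominal 2.5 GHz clock.

```python
# Sketch: reproduce the theoretical peak from the hardware table.
# Assumption (not from the table): a Haswell core retires up to 16
# double-precision FLOP per cycle (2 FMA units x 4 doubles x 2 ops per FMA).

NODES = 7712
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 12
CLOCK_HZ = 2.5e9          # nominal E5-2680 v3 clock
DP_FLOP_PER_CYCLE = 16    # assumed AVX2 FMA throughput per core


def peak_flops(nodes: int) -> float:
    """Theoretical double-precision peak in FLOP/s for `nodes` compute nodes."""
    cores = nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
    return cores * CLOCK_HZ * DP_FLOP_PER_CYCLE


sockets = NODES * SOCKETS_PER_NODE
cores = sockets * CORES_PER_SOCKET
print(f"sockets: {sockets}")                                # sockets: 15424
print(f"cores:   {cores}")                                  # cores:   185088
print(f"peak:    {peak_flops(NODES) / 1e15:.2f} PFlop/s")   # peak:    7.40 PFlop/s
```

The result is a theoretical upper bound; real applications reach only a fraction of it, for example because the sustained clock under AVX load is lower and memory bandwidth limits throughput.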

For detailed information see XC40-Intro

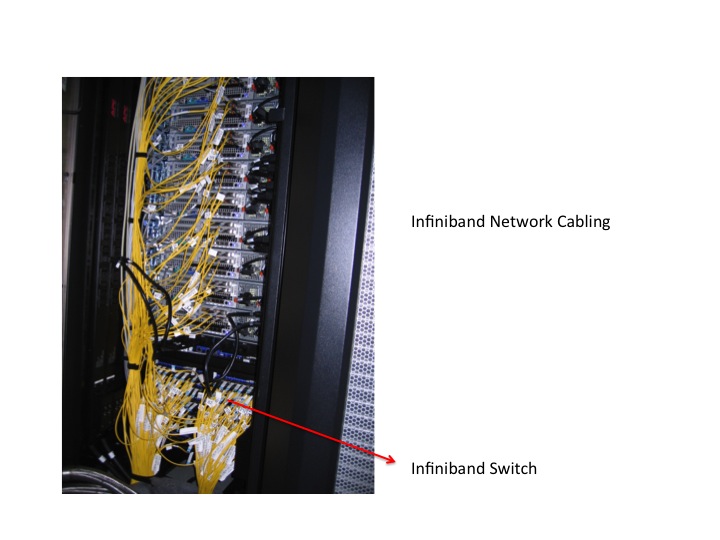

For information on the Aries network see Communication_on_Cray_XC40_Aries_network

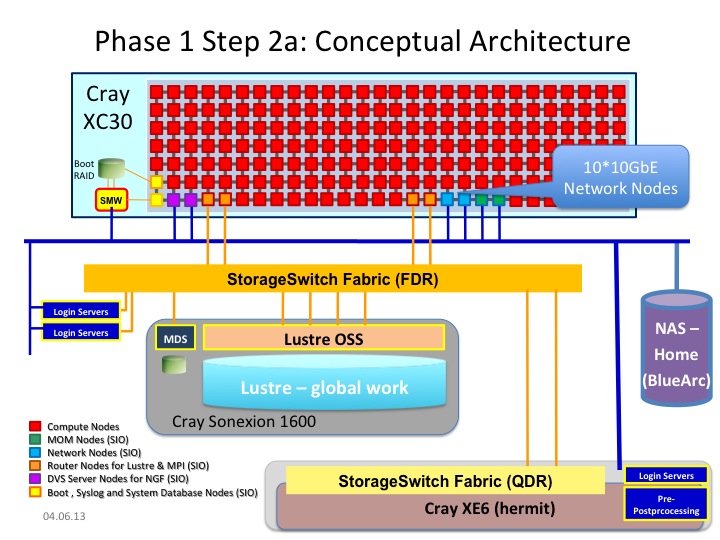

Architecture

- System Management Workstation (SMW)

  - the system administrator's console for managing the Cray system: monitoring, installing and upgrading software, controlling the hardware, and starting and stopping the XC40 system.

- service nodes are classified into:

  - login nodes, for users to access the system

  - boot nodes, which provide the OS for all other nodes, licenses, ...

  - network nodes, which provide, e.g., external network connections for the compute nodes

  - Cray Data Virtualization Service (DVS) nodes: DVS is an I/O forwarding service that can parallelize the I/O transactions of an underlying POSIX-compliant file system.

  - sdb node, for services like ALPS, Torque, Moab, Slurm, Cray management services, ...

  - I/O nodes, e.g., for Lustre

  - MOM nodes, which place user jobs of the batch system into execution

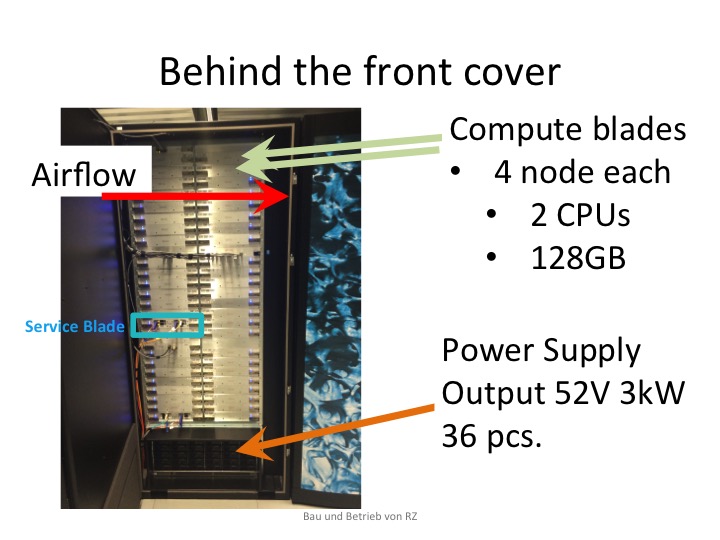

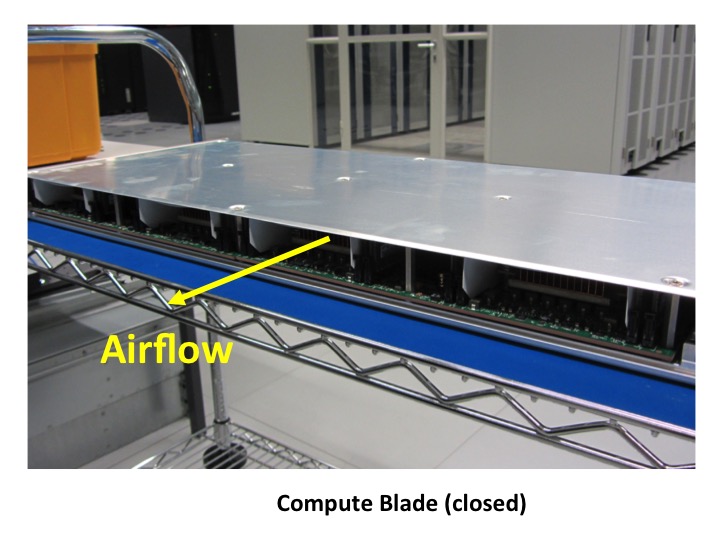

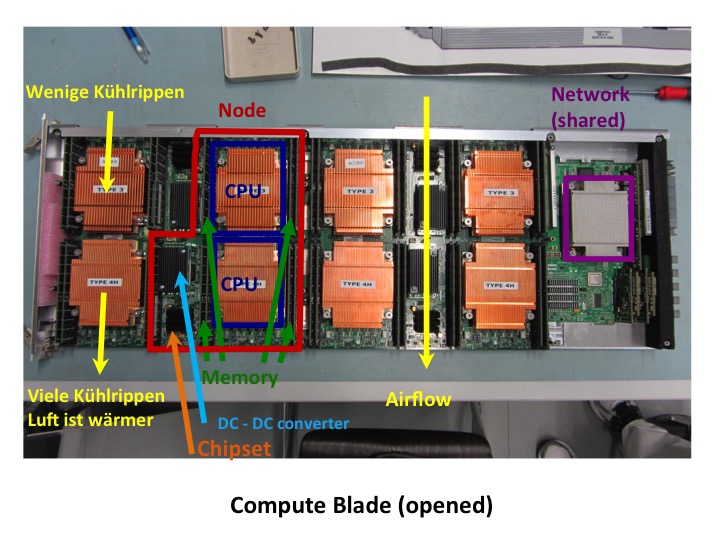

- compute nodes

  - are available to users only through the batch system and the Application Level Placement Scheduler (ALPS); see running applications.

  - Each compute node has 128 GB of memory and is connected to the fast Cray Aries interconnect.

  - For details about the interconnect, see Cray XC series network and Communication_on_Cray_XC40_Aries_network.

- In the future, the StorageSwitch Fabric of Step 2a and Step 1 will be connected, so that the Lustre workspace filesystems can be used from the hardware of both Step 1 and Step 2a (login servers and pre-processing servers).
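Because the compute nodes are reachable only through the batch system and ALPS, a job is sized in whole nodes. The following hypothetical helper (`plan_job` and the executable name `./my_app` are illustrative, not part of any HLRS or Cray tool) derives the node count and the memory available per MPI rank from the figures on this page (2 × 12 cores, 2 hyper-threads per core, 128 GB per node) and assembles an `aprun` line using its `-n` (total processing elements) and `-N` (processing elements per node) options. It assumes that `-j 2` is needed to place more than 24 ranks on a node, i.e. to use both hyper-threads of each core. The operating system reserves part of the 128 GB, so the per-rank memory is an upper bound.

```python
# Hypothetical helper: pack MPI ranks onto Hazelhen compute nodes and
# build a matching aprun launch line. Node figures come from this page;
# the helper and the executable name ./my_app are illustrative only.
import math

CORES_PER_NODE = 24        # 2 sockets x 12 cores
HW_THREADS_PER_NODE = 48   # 2 hyper-threads per core
MEM_PER_NODE_GB = 128      # installed memory; usable memory is somewhat lower


def plan_job(ranks: int, ranks_per_node: int = CORES_PER_NODE) -> dict:
    """Return node count, memory per rank (GB) and an aprun command line."""
    if ranks < 1:
        raise ValueError("ranks must be at least 1")
    if not 1 <= ranks_per_node <= HW_THREADS_PER_NODE:
        raise ValueError("ranks_per_node must be between 1 and 48")
    nodes = math.ceil(ranks / ranks_per_node)
    mem_per_rank_gb = MEM_PER_NODE_GB / ranks_per_node
    # Assumption: -j 2 enables both hyper-threads per core, which is
    # required when more ranks than physical cores share a node.
    ht = "-j 2 " if ranks_per_node > CORES_PER_NODE else ""
    # -n: total processing elements, -N: processing elements per node
    cmd = f"aprun -n {ranks} -N {ranks_per_node} {ht}./my_app"
    return {"nodes": nodes, "mem_per_rank_gb": mem_per_rank_gb, "aprun": cmd}


plan = plan_job(1000)
print(plan["nodes"])                      # 42
print(round(plan["mem_per_rank_gb"], 2))  # 5.33
print(plan["aprun"])                      # aprun -n 1000 -N 24 ./my_app
```

Filling every core (24 ranks per node) is the default here; passing a smaller `ranks_per_node` trades nodes for more memory per rank.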