- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CAE howtos

(initial version) |

No edit summary |

||

| (19 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

= First steps = | |||

The first steps for [[Vulcan]] and [[HPE_Hawk|Hawk]] are quite similar and shown for the latter here. | |||

To work on a remote system a full workflow (including data transfers) has to be developed and one part of it is on the user side and user-specific. | |||

== Login == | |||

[[HPE_Hawk_access|Login]] to a frontend through [[Secure_Shell_ssh|ssh]], e.g. | |||

ssh -X user@hawk.hww.hlrs.de | |||

The transfer of files will also be done using ssh. | |||

(Windows users might e.g. use the <q>ssh</q> and <q>scp</q> command from the [https://learn.microsoft.com/en-us/windows/wsl/ Windows Subsystem for Linux] or [https://www.cygwin.com/ Cygwin] as well as utilities like [https://www.chiark.greenend.org.uk/~sgtatham/putty/ PuTTY] (<q>pscp</q>) or [https://winscp.net/de WinSCP]) | |||

== Workspaces == | |||

Simulation data (input as well as output) should be stored in [[Workspace_mechanism|Workspaces]]. | |||

A workspace is a directory, which is created for a user on a suitable file system and provided for a limited time. | |||

There's usually a (group) quota set for the data volume and the maximum number of files, which can be checked with <q>ws_quota</q>. | |||

Creating e.g. a <q>test</q> workspace for 1 day can be done with | |||

ws_allocate test 1 | |||

After that time the directory will be moved and unaccessible by the user (but still counts for the quota) | |||

to be completely removed in a second stage. (Use <q>ws_restore -l</q> to check which workspaces still could be restored.) | |||

To better find workspace directories remotely with GUI-based utilities | |||

(like e.g. [https://filezilla-project.org/ Filezilla], where the <q>ws_*</q> commands can't be executed), | |||

links can be automatically generated e.g. in a extra directory in $HOME created for that purpose: | |||

mkdir ~/ws.Hawk | |||

ws_register ~/ws.Hawk | |||

(the tilde, as well as $HOME, represents the HOME-directory) | |||

<q>ws_register</q> has to be repeatedly executed after creation/allocation or deletion/release of workspaces. | |||

To list all workspaces with the remaining time use | |||

ws_list | |||

Change the current directory to the <q>test</q> workspace directory and list the content e.g. with | |||

cd `ws_find test` | |||

pwd | |||

ls -al | |||

(The <q>ws_find</q> command in the backticks determines the workspace directory.) | |||

== Modules == | |||

The [[Module_environment(Hawk)|Module environment system]] adapts the environment to use certain software. | |||

module avail | |||

shows the available modules, which can be <q>load</q>ed to be used and <q>unload</q>ed afterwards. | |||

== Requesting ressources == | |||

The ressources are managed by the PBSpro [[Batch_System_PBSPro_(Hawk)|Batch System]]. | |||

qsub -IXlselect=2:mpiprocs=8,walltime=0:10:00 -q test | |||

will ask for an interactive (-I) job (-X to enable X-forwarding) | |||

requesting 2 nodes for 10 minutes in the test queue | |||

to start a MPI program with 2*8 processes. | |||

After some waiting time a shell, running on the first node will open with a prompt. | |||

module load openmpi | |||

module load cae | |||

mpirun -np 16 showaffinity.openmpi -p hawk0 | |||

runs the <q>showaffinity</q> test program (which needs the cae module) to display the pinning. | |||

qwtime | |||

(which also needs cae) shows the remaining time and | |||

exit | |||

will terminate the interactive job, which usually will be used for testing and debugging purposes. | |||

(Only the time really used for the job will be accounted, not the requested walltime.) | |||

Production jobs typically use job scripts (e.g. bash scripts), which contain the commands to be executed. | |||

(utilities like [[CAE_utilities#qgen|qgen]] can be used to automatically create these job scripts for certain tasks) | |||

<q>qsub</q> will be executed without the <q>-I</q> Option, but a the job script as a positional argument instead. | |||

=Licensing= | =Licensing= | ||

| Line 9: | Line 96: | ||

=== Setup === | === Setup === | ||

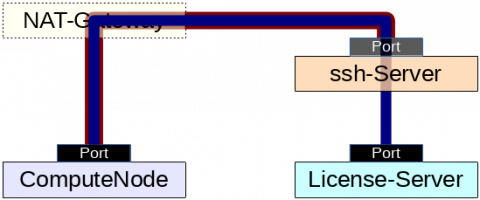

[[File:Lizenz-ssh-Tunnel.png|center|480px|ssh tunnel for license server]] | |||

==== application node (compute node) ==== | |||

the node where the license is drawn | |||

==== ssh server ==== | |||

a proxy between the application node and the license server | |||

* The ssh server has to be accessible from the application node (maybe through a NAT-gateway) and the license server has to be accessible by the ssh server. Thus there mustn't be a firewall to prevent the connections. However the ssh server firewall only has to enable a connection to the application node and the license server port (and probably an administration computer or internal network). | |||

* The sshd configuration has to enable "AllowTcpForwarding yes" (instead of port 22 also an alternative port might be used). | |||

* The ssh server user does not need a login-shell to just establish a ssh tunnel (''/bin/false'' is enough), but | |||

* a passwordless access is needed to automize the setup of a ssh tunnel from a job script. | |||

==== license server ==== | |||

the node a license is served | |||

=== Job script example excerpt === | === Job script example excerpt === | ||

export LICSERVER= | # specify license server and port (using a TCP connection) | ||

export LICSERVER_PORT= | export LICSERVER=licserver.mydomain.de # license server | ||

export LICSERVER_PORT=12345 # license server port (use vendor daemon port for flexnet) | |||

echo -e "license server:\t ${LICSERVER}:${LICSERVER_PORT}" | echo -e "license server:\t ${LICSERVER}:${LICSERVER_PORT}" | ||

export LICSERVERlocal=localhost # local license server | export LICSERVERlocal=localhost # local license server | ||

#export LICSERVERlocal=`hostname` # needs ssh \* binding address | #export LICSERVERlocal=`hostname` # needs ssh \* binding address | ||

export LICSERVERlocal_PORT=${LICSERVER_PORT:-12345} # local license port | export LICSERVERlocal_PORT=${LICSERVER_PORT:-12345} # local license port | ||

echo -e "local license ssh tunnel end:\t${LICSERVERlocal}:${LICSERVERlocal_PORT}" | echo -e "local license ssh tunnel end:\t${LICSERVERlocal}:${LICSERVERlocal_PORT}" | ||

SSH_userserver="user@sshserver.mydomain.de" # passwordless ssh access needed! | |||

SSH_PORT=22 | |||

echo "[`date +%Y-%m-%dT%H:%M:%S`] setting up ssh tunnel through ${ | SSH_ctrlsocket="sshtunnelCtrlSocket.${PBS_JOBID}" | ||

#rm - | echo "[`date +%Y-%m-%dT%H:%M:%S`] setting up ssh tunnel through ${SSH_userserver} (control socket: ${SSH_ctrlsocket})" | ||

ssh -MS "${ | #rm -f "${SSH_ctrlsocket}" # removing socket file should not be necessary | ||

ssh -S "${ | # establish ssh tunnel (might add additional options like e.g. -o ServerAliveInterval=60 or -o TCPKeepAlive=yes) | ||

ssh -MS "${SSH_ctrlsocket}" -fNTL ${LICSERVERlocal_PORT}:${LICSERVER}:${LICSERVER_PORT} -p ${SSH_PORT} ${SSH_userserver} | |||

# check ssh tunnel | |||

ssh -S "${SSH_ctrlsocket}" -O check ${SSH_userserver} || (echo "ssh CTRL socket ${SSH_ctrlsocket} check failed - wait some more time..."; sleep 10) | |||

## adjusting license server environment variables to the ssh tunnel end | ## adjusting license server environment variables to the ssh tunnel end | ||

#export LM_LICENSE_FILE="${LICSERVERlocal_PORT}@${ | # e.g. flexnet (using vendor daemon port) | ||

export LM_LICENSE_FILE="${LICSERVERlocal_PORT}@${LICSERVERlocal}" | |||

nc -zvw4 ${LICSERVERlocal} ${LICSERVERlocal_PORT} # | echo "[`date +%Y-%m-%dT%H:%M:%S`] licensing redirected to ${LM_LICENSE_FILE}" | ||

# alternative check of connection (output redirected to stderr) | |||

nc -zvw4 ${LICSERVERlocal} ${LICSERVERlocal_PORT} 1>&2 | |||

# alternatives, e.g.: | |||

##nmap --system-dns -PN -p${LICSERVERlocal_PORT} ${LICSERVERlocal} | |||

if [ $? -ne 0 ]; then | |||

echo "ERROR reaching ${LICSERVERlocal}:${LICSERVERlocal_PORT}" | |||

else | |||

echo "test connection to ${LICSERVERlocal}:${LICSERVERlocal_PORT} succeeded" | |||

fi | |||

# | # | ||

# start simulation | # start simulation... | ||

# | # | ||

ssh -S "${ | # close connection | ||

ssh -S "${SSH_ctrlsocket}" -O exit ${SSH_userserver} | |||

=== Improvements === | |||

* replacing ssh with [https://www.harding.motd.ca/autossh/ autossh] to automatically restart the ssh-connection if necessary and improve resiliency | |||

=== Connectivity checks === | |||

There might be firewalls, which block a direct connection. | |||

To check, if a connection can be established, some checks might be performed | |||

(e.g. from a frontend or within an interactive session) | |||

* check if port can be reached | |||

nc -zvw4 <SERVER> <PORT> | |||

nmap --system-dns -PN -p <PORT> ${SERVER} | |||

(nmap is not available on HLRS/HWW systems at the moment.) | |||

* check how far we get (assuming TCP connection) | |||

traceroute -T -p <PORT> <SERVER> | |||

(traceroute is disabled on HLRS/HWW systems at the moment.) | |||

For UDP also tracepath might be used. | |||

* check external IP address | |||

The IP address "seen" from outside might be different than the internal one. | |||

Check e.g. | |||

https://websrv.hlrs.de/ipinfo | |||

=Jobscipts= | |||

== Self-initiate termination & more == | |||

PBSpro (and other batch systems) send a SIGTERM to the executed jobscript at the end of the job walltime. | |||

However the time before the job termination might be too short and thus taking care of this within the | |||

jobscript itself is a more flexible alternative. First the time to wait will be calculated | |||

(assuming running a bash jobscript in the example here): | |||

timebeforeend=$(( 5*60 )) # 5 min | |||

module load cae | |||

jobremainingwalltime=$(qwtime -r) | |||

remaintingwalltime2stop=$(( jobremainingwalltime-timebeforeend )) | |||

=== Send SIGTERM to command after some time === | |||

If the command is known e.g. killall can send a SIGTERM: | |||

cmd="path/mycommand" | |||

(sleep ${remaintingwalltime2stop}; killall "${cmd}" ) & # start a subshell in the background which will sleep first | |||

$cmd $options # also with e.g. mpirun | |||

=== LS-Dyna === | |||

<span id="LSDYNAjobterminate"></span> | |||

LS-Dyna checks the existence and content of a file d3kil, | |||

which makes it possible to trigger a program termination: | |||

# LS-Dyna sense switches | |||

##Type Response | |||

# SW1. A restart file is written and LS-DYNA terminates. | |||

# SW2. LS-DYNA responds with time and cycle numbers. | |||

# SW3. A restart file is written and LS-DYNA continues. | |||

# SW4. A plot state is written and LS-DYNA continues. | |||

# SW5. Enter interactive graphics phase and real time visualization. | |||

# SW7. Turn off real time visualization. | |||

# SW8. Interactive 2D rezoner for solid elements and real time visualization. | |||

# SW9. Turn off real time visualization (for option SW8). | |||

# SWA. Flush ASCII file buffers. | |||

# lprint Enable/Disable printing of equation solver memory, cpu requirements. | |||

# nlprint Enable/Disable printing of nonlinear equilibrium iteration information. | |||

# iter Enable/Disable output of binary plot database "d3iter" showing mesh after each equilibrium iteration. Useful for debugging convergence problems. | |||

# conv Temporarily override nonlinear convergence tolerances. | |||

# stop Halt execution immediately, closing open files. | |||

## | |||

dumpsenseswitch='SW1' | |||

# see above for the definition of remaintingwalltime2stop | |||

(sleep ${remaintingwalltime2stop}; echo ${dumpsenseswitch} >d3kil ) & | |||

# LS-Dyna will be executed afterwards | |||

=== Check free memory === | |||

The same technique can also be used to check the free memory after a initial waiting time of 10sec periodically every minute, | |||

saving the results in an file within the actual directory e.g. with | |||

(sleep 10; freeavail.sh --periodic 60:`qwtime -r` -n `qjobnodes.sh -n` > "$PWD/freeavail_${PBS_JOBNAME%.*}.${PBS_JOBID%%.*}") & | |||

before starting the program. | |||

=ISV codes= | |||

* also see [[ISV_Usage]] | |||

= OpenFOAM® = | |||

Your job script is launched via qsub and holds every information for your complete job. | |||

An example, for e.g. pimpleFoam, is given below, the job script name is job.pbs: | |||

#!/bin/bash | |||

#PBS -N myjobname | |||

#PBS -l select=3:node_type=rome:mpiprocs=128 | |||

#PBS -l walltime=00:20:00 | |||

set -e | |||

# Change to the direcotry that the job was submitted from | |||

cd $PBS_O_WORKDIR | |||

module load openfoam/2312-int32 | |||

# rome-scripts for easy omplace usage: | |||

module load rome-scripts | |||

timestamp=$(date +"%Y%m%d-%H%M") | |||

###using 3 nodes with 96 of 128 cores used per node with pinning and correct displacemt. resulting in 288 mpiprocs | |||

mpirun -np 288 omplace -c `distribute_by_fraction.py 96 128` pimpleFoam -parallel > "${timestamp}_log_pimpleFoam.txt" 2>&1 | |||

# using 3 nodes 384 mpiprocs one would use: | |||

# mpirun -np 384 pimpleFoam -parallel > "${timestamp}_log_pimpleFoam.txt" 2>&1 | |||

Several versions are available and can be listed via the following command: | |||

module avail foam | |||

Latest revision as of 09:14, 5 July 2024

First steps

The first steps on Vulcan and Hawk are very similar and are shown here for Hawk. Working on a remote system requires setting up a complete workflow (including data transfers). Part of this workflow runs on the user's side and is user-specific.

Login

Log in to a frontend via ssh, e.g.

ssh -X user@hawk.hww.hlrs.de

File transfers are also done via ssh.

(Windows users can, for example, use the ssh and scp commands from the Windows Subsystem for Linux or Cygwin, or utilities such as PuTTY (pscp) or WinSCP.)

Workspaces

Simulation data (input as well as output) should be stored in Workspaces.

A workspace is a directory that is created for a user on a suitable file system and is available for a limited time.

There is usually a (group) quota on the data volume and on the maximum number of files. The quota can be checked with ws_quota.

For example, the following command creates a workspace named test with a lifetime of 1 day:

ws_allocate test 1

When this time has expired, the directory is moved and is no longer accessible to the user, although it still counts towards the quota. In a second stage it is removed completely. (Use ws_restore -l to list the workspaces that can still be restored.)

GUI-based tools such as Filezilla cannot execute the ws_* commands. To make workspace directories easier to find with such tools, links to them can be generated automatically, e.g. in a dedicated directory in $HOME:

mkdir ~/ws.Hawk

ws_register ~/ws.Hawk

(Both the tilde and $HOME refer to the home directory.)

ws_register has to be run again after each creation/allocation or deletion/release of a workspace.
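The links are only refreshed when ws_register runs, so it can help to bundle the two steps. The following is a minimal sketch in bash. The function names ws_alloc_reg and ws_release_reg and the variable WS_LINKDIR are hypothetical and are not part of the workspace tools. The sketch assumes ws_release is available alongside ws_allocate and ws_register.

```shell
#!/bin/bash
# Hypothetical wrappers (not part of the workspace tools): refresh the links in
# the link directory right after allocating or releasing a workspace.
WS_LINKDIR="${WS_LINKDIR:-$HOME/ws.Hawk}"

ws_alloc_reg() {
    # all arguments are passed unchanged to ws_allocate, e.g. "test 1"
    ws_allocate "$@" && ws_register "$WS_LINKDIR"
}

ws_release_reg() {
    # all arguments are passed unchanged to ws_release, e.g. "test"
    ws_release "$@" && ws_register "$WS_LINKDIR"
}
```

Usage, e.g. after defining the functions in ~/.bashrc: `ws_alloc_reg test 1`. ws_register is skipped if the allocation fails.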

To list all workspaces with their remaining lifetime, use

ws_list

To change into the test workspace directory and list its contents, use e.g.

cd `ws_find test`

pwd

ls -al

(The ws_find command inside the backticks prints the path of the workspace directory, which is then passed to cd.)
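If the workspace has expired, ws_find prints nothing and a plain cd silently changes to $HOME. The following is a minimal bash sketch of a safer variant. The function name ws_cd is hypothetical and is not an HLRS tool. It uses $(...) instead of backticks.

```shell
#!/bin/bash
# Hypothetical helper (not an HLRS tool): change into the workspace NAME and
# stop with an error if ws_find prints nothing (e.g. expired workspace).
ws_cd() {
    local name="$1" dir
    dir=$(ws_find "$name" 2>/dev/null) || dir=""
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
        echo "workspace '${name}' not found" >&2
        return 1
    fi
    cd "$dir"
}
```

Usage: `ws_cd test && ls -al`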

Modules

The module environment system adapts the shell environment so that specific software can be used.

module avail

lists the available modules. They can be loaded for use (module load) and unloaded afterwards (module unload).

Requesting resources

Resources are managed by the PBSpro batch system.

qsub -IXlselect=2:mpiprocs=8,walltime=0:10:00 -q test

requests an interactive job (-I) with X forwarding enabled (-X). The resources are given with -l; the three options are combined into -IXl. The job requests 2 nodes for 10 minutes in the test queue, to run an MPI program with 2*8 = 16 processes. After some waiting time, a shell running on the first node opens with a prompt.

module load openmpi

module load cae

mpirun -np 16 showaffinity.openmpi -p hawk0

runs the showaffinity test program (which needs the cae module) to display the process pinning.

qwtime

(which also needs cae) shows the remaining time and

exit

terminates the interactive job. Interactive jobs are usually used for testing and debugging. (Only the time actually used by the job is accounted, not the requested walltime.)
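Instead of hard-coding -np 16, the process count can be derived inside the job from the node file. The following is a sketch. It assumes, as documented for PBS Pro, that $PBS_NODEFILE lists each host once per MPI process (mpiprocs per chunk). The function names job_nprocs and job_nnodes are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers: derive process and node counts from $PBS_NODEFILE,
# which PBS Pro fills with one line per MPI process (mpiprocs per chunk).
job_nprocs() {
    [ -r "${PBS_NODEFILE:-}" ] || { echo "PBS_NODEFILE not readable" >&2; return 1; }
    wc -l < "$PBS_NODEFILE" | tr -d ' '
}

job_nnodes() {
    [ -r "${PBS_NODEFILE:-}" ] || { echo "PBS_NODEFILE not readable" >&2; return 1; }
    sort -u "$PBS_NODEFILE" | wc -l | tr -d ' '
}
```

Usage within the interactive job: `mpirun -np "$(job_nprocs)" showaffinity.openmpi -p hawk0`. With select=2:mpiprocs=8, this should give 16 processes on 2 nodes.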

Production jobs typically use job scripts (e.g. bash scripts) that contain the commands to be executed.

(Utilities such as qgen can be used to create these job scripts automatically for certain tasks.)

In this case, qsub is called without the -I option and with the job script as a positional argument instead.
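As a sketch, the interactive request above could be turned into a batch job along these lines. The file name affinity.pbs and the job name are hypothetical. The resources and commands are taken from the interactive example. X forwarding (-X) is omitted because a batch job has no display attached. The script is written with a heredoc so it can be created directly from the shell.

```shell
#!/bin/bash
# Write a job script equivalent to the interactive example (hypothetical names).
# The quoted 'EOF' keeps $PBS_O_WORKDIR unexpanded until the job runs.
cat > affinity.pbs <<'EOF'
#!/bin/bash
#PBS -N affinitytest
#PBS -q test
#PBS -l select=2:mpiprocs=8
#PBS -l walltime=00:10:00

# start in the directory the job was submitted from
cd "$PBS_O_WORKDIR"

module load openmpi
module load cae

mpirun -np 16 showaffinity.openmpi -p hawk0
EOF
```

The job is then submitted with `qsub affinity.pbs`.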

Licensing

ssh-Tunnel

To use a remote license server, an ssh tunnel can be used. If the tunnel connects a local TCP port on the compute node with the port the license server listens on, the license can be checked out through the local port.

Setup

[Figure: ssh tunnel for license server]

application node (compute node)

the node on which the license is checked out

ssh server

a proxy between the application node and the license server

- The ssh server has to be reachable from the application node (possibly through a NAT gateway), and the license server has to be reachable from the ssh server. No firewall may block these connections. However, the ssh server's firewall only has to permit the connection from the application node and the connection to the license server port (and probably access from an administration computer or internal network).

- The sshd configuration has to enable "AllowTcpForwarding yes" (an alternative port may be used instead of port 22).

- The ssh server user does not need a login shell just to establish an ssh tunnel (/bin/false is enough), but

- passwordless access (e.g. with an ssh key) is needed to automate setting up the ssh tunnel from a job script.

license server

the node from which the license is served

Job script example excerpt

# specify license server and port (using a TCP connection)

export LICSERVER=licserver.mydomain.de # license server

export LICSERVER_PORT=12345 # license server port (use vendor daemon port for flexnet)

echo -e "license server:\t ${LICSERVER}:${LICSERVER_PORT}"

export LICSERVERlocal=localhost # local license server

#export LICSERVERlocal=`hostname` # needs ssh * binding address

export LICSERVERlocal_PORT=${LICSERVER_PORT:-12345} # local license port

echo -e "local license ssh tunnel end:\t${LICSERVERlocal}:${LICSERVERlocal_PORT}"

SSH_userserver="user@sshserver.mydomain.de" # passwordless ssh access needed!

SSH_PORT=22

SSH_ctrlsocket="sshtunnelCtrlSocket.${PBS_JOBID}"

echo "[`date +%Y-%m-%dT%H:%M:%S`] setting up ssh tunnel through ${SSH_userserver} (control socket: ${SSH_ctrlsocket})"

#rm -f "${SSH_ctrlsocket}" # removing socket file should not be necessary

# establish ssh tunnel (might add additional options like e.g. -o ServerAliveInterval=60 or -o TCPKeepAlive=yes)

ssh -MS "${SSH_ctrlsocket}" -fNTL ${LICSERVERlocal_PORT}:${LICSERVER}:${LICSERVER_PORT} -p ${SSH_PORT} ${SSH_userserver}

# check ssh tunnel

ssh -S "${SSH_ctrlsocket}" -O check ${SSH_userserver} || (echo "ssh CTRL socket ${SSH_ctrlsocket} check failed - wait some more time..."; sleep 10)

## adjusting license server environment variables to the ssh tunnel end

# e.g. flexnet (using vendor daemon port)

export LM_LICENSE_FILE="${LICSERVERlocal_PORT}@${LICSERVERlocal}"

echo "[`date +%Y-%m-%dT%H:%M:%S`] licensing redirected to ${LM_LICENSE_FILE}"

# alternative check of connection (output redirected to stderr)

nc -zvw4 ${LICSERVERlocal} ${LICSERVERlocal_PORT} 1>&2

# alternatives, e.g.:

##nmap --system-dns -PN -p${LICSERVERlocal_PORT} ${LICSERVERlocal}

if [ $? -ne 0 ]; then

echo "ERROR reaching ${LICSERVERlocal}:${LICSERVERlocal_PORT}"

else

echo "test connection to ${LICSERVERlocal}:${LICSERVERlocal_PORT} succeeded"

fi

#

# start simulation...

#

# close connection

ssh -S "${SSH_ctrlsocket}" -O exit ${SSH_userserver}
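The final ssh -O exit never runs if the job script aborts early, e.g. because of set -e or a failing simulation. The following is a sketch that registers the cleanup in an EXIT trap right after the tunnel is established. It assumes bash and the variables SSH_ctrlsocket and SSH_userserver from the excerpt above. The function name close_ssh_tunnel is hypothetical.

```shell
#!/bin/bash
# Hypothetical cleanup: close the ssh master connection (and thus the tunnel)
# whenever the job script exits, including after errors.
close_ssh_tunnel() {
    # errors are ignored, e.g. if the tunnel was already closed explicitly
    ssh -S "${SSH_ctrlsocket}" -O exit "${SSH_userserver}" 2>/dev/null || true
}
trap close_ssh_tunnel EXIT
```

With the trap in place, the explicit ssh -O exit at the end of the script can stay. A second call simply fails, and that failure is ignored.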

Improvements

- replace ssh with autossh to restart the ssh connection automatically if necessary and thus improve resilience
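The following sketches the tunnel setup with autossh. It assumes that autossh is installed on the compute node, which has not been verified for HLRS systems. autossh reserves -M for its own monitoring port, so the ssh control-socket options of the excerpt above cannot be used here. Instead, -M 0 disables the monitoring port so that autossh relies on ssh's ServerAlive messages. The AUTOSSH_PIDFILE environment variable records the PID so the tunnel can be stopped at the end of the job. The function names and the pid file name are hypothetical. The variables are the ones from the job script excerpt.

```shell
#!/bin/bash
# Hypothetical autossh variant of the tunnel setup (variables as in the excerpt).
start_license_tunnel() {
    export AUTOSSH_PIDFILE="${PWD}/autossh.${PBS_JOBID:-$$}.pid"
    # -M 0: no monitoring port, autossh restarts ssh when it exits;
    # ServerAlive* makes ssh exit on a dead connection; -f: go to background
    autossh -M 0 -f -N -T \
        -o ServerAliveInterval=30 -o ServerAliveCountMax=3 \
        -L "${LICSERVERlocal_PORT}:${LICSERVER}:${LICSERVER_PORT}" \
        -p "${SSH_PORT}" "${SSH_userserver}"
}

stop_license_tunnel() {
    # SIGTERM to autossh is expected to stop its ssh child as well
    [ -f "${AUTOSSH_PIDFILE:-}" ] && kill "$(cat "$AUTOSSH_PIDFILE")"
}
```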

Connectivity checks

Firewalls might block a direct connection. To check whether a connection can be established, the following checks can be performed (e.g. from a frontend or within an interactive session):

- check whether the port can be reached

nc -zvw4 <SERVER> <PORT>

nmap --system-dns -PN -p <PORT> <SERVER>

(nmap is not available on HLRS/HWW systems at the moment.)

- check how far the connection gets (assuming a TCP connection)

traceroute -T -p <PORT> <SERVER>

(traceroute is disabled on HLRS/HWW systems at the moment.)

For UDP, tracepath can also be used.

- check the external IP address

The IP address "seen" from outside might differ from the internal one. Check e.g.

https://websrv.hlrs.de/ipinfo
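Since nmap and traceroute are not available, the port check can also be done without external tools. The following is a sketch that uses bash's built-in /dev/tcp redirection and the coreutils timeout command. The function name port_open and its default timeout of 4 seconds (mirroring nc -w4) are hypothetical choices.

```shell
#!/bin/bash
# Hypothetical helper: test whether HOST:PORT accepts TCP connections using only
# bash's /dev/tcp redirection and coreutils' timeout (no nc or nmap needed).
# Returns 0 if reachable, non-zero otherwise (124 on timeout, 2 on bad usage).
port_open() {
    local host=$1 port=$2 secs=${3:-4}
    if [ -z "$host" ] || [ -z "$port" ]; then
        echo "usage: port_open HOST PORT [TIMEOUT]" >&2
        return 2
    fi
    # inside bash -c, $0 is the host and $1 is the port
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

Usage: `port_open <SERVER> <PORT> && echo reachable || echo "not reachable"`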

Jobscripts

Self-initiated termination & more

PBSpro (like other batch systems) sends a SIGTERM to the running jobscript when the job walltime is reached. However, the time between this signal and the end of the job might be too short, so handling termination within the jobscript itself is a more flexible alternative. First, the time to wait is calculated (this example assumes a bash jobscript):

timebeforeend=$(( 5*60 )) # 5 min

module load cae

jobremainingwalltime=$(qwtime -r)

remaintingwalltime2stop=$(( jobremainingwalltime-timebeforeend ))
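If less walltime is left than timebeforeend, the difference becomes negative and sleep fails with an error. If qwtime -r prints nothing, the arithmetic silently uses 0. The following is a sketch of a guarded calculation. It assumes, as the calculation above implies, that qwtime -r prints the remaining walltime in seconds. The function name stop_delay is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: seconds to wait before triggering a self-initiated stop.
# REMAINING: remaining walltime in seconds (e.g. from "qwtime -r")
# MARGIN:    time reserved before the end of the job (e.g. timebeforeend)
stop_delay() {
    local remaining=$1 margin=$2 v delay
    for v in "$remaining" "$margin"; do
        case "$v" in
            ''|*[!0-9]*) echo "stop_delay: non-numeric input '${v}'" >&2; return 1 ;;
        esac
    done
    # 10# forces base 10 so that values with leading zeros are not read as octal
    delay=$(( 10#$remaining - 10#$margin ))
    # never negative: stop right away if the margin is already used up
    if (( delay < 0 )); then delay=0; fi
    echo "$delay"
}
```

Usage: `remaintingwalltime2stop=$(stop_delay "$(qwtime -r)" "$timebeforeend") || exit 1`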

Send SIGTERM to command after some time

If the command is known, killall, for example, can send it a SIGTERM:

cmd="path/mycommand"

(sleep ${remaintingwalltime2stop}; killall "${cmd}" ) & # start a subshell in the background which will sleep first

$cmd $options # also with e.g. mpirun
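killall signals every process with a matching name on the node. A more targeted variant signals only the process started by the jobscript, using its PID. The following is a sketch of this alternative technique. The function name run_with_deadline is hypothetical. Whether mpirun forwards the SIGTERM to all MPI ranks depends on the MPI implementation.

```shell
#!/bin/bash
# Hypothetical helper: run a command and send it SIGTERM after DELAY seconds.
# Unlike killall, only the process started here is signalled.
# Returns the command's exit status (143 = terminated by SIGTERM).
run_with_deadline() {
    local delay=$1 pid timer rc=0
    shift
    "$@" &
    pid=$!
    # timer in a background subshell; output goes to /dev/null
    ( sleep "$delay"; kill -TERM "$pid" 2>/dev/null ) >/dev/null 2>&1 &
    timer=$!
    wait "$pid" || rc=$?
    # command finished: stop the timer subshell so it never sends the signal
    # (its sleep child may linger until DELAY expires, but does nothing else)
    kill "$timer" 2>/dev/null
    return "$rc"
}
```

Usage: `run_with_deadline "${remaintingwalltime2stop}" $cmd $options`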

LS-Dyna

LS-Dyna checks for the existence and content of a file named d3kil, which makes it possible to trigger a program termination:

# LS-Dyna sense switches

##Type Response

# SW1. A restart file is written and LS-DYNA terminates.

# SW2. LS-DYNA responds with time and cycle numbers.

# SW3. A restart file is written and LS-DYNA continues.

# SW4. A plot state is written and LS-DYNA continues.

# SW5. Enter interactive graphics phase and real time visualization.

# SW7. Turn off real time visualization.

# SW8. Interactive 2D rezoner for solid elements and real time visualization.

# SW9. Turn off real time visualization (for option SW8).

# SWA. Flush ASCII file buffers.

# lprint Enable/Disable printing of equation solver memory, cpu requirements.

# nlprint Enable/Disable printing of nonlinear equilibrium iteration information.

# iter Enable/Disable output of binary plot database "d3iter" showing mesh after each equilibrium iteration. Useful for debugging convergence problems.

# conv Temporarily override nonlinear convergence tolerances.

# stop Halt execution immediately, closing open files.

##

dumpsenseswitch='SW1'

# see above for the definition of remaintingwalltime2stop

(sleep ${remaintingwalltime2stop}; echo ${dumpsenseswitch} >d3kil ) &

# LS-Dyna will be executed afterwards

Check free memory

The same technique can also be used to check the free memory every minute after an initial waiting time of 10 s, saving the results in a file in the current directory, e.g. with

(sleep 10; freeavail.sh --periodic 60:`qwtime -r` -n `qjobnodes.sh -n` > "$PWD/freeavail_${PBS_JOBNAME%.*}.${PBS_JOBID%%.*}") &

before starting the program.
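Where freeavail.sh is not available, a rough single-node substitute can read MemAvailable from the Linux /proc/meminfo. The following is a sketch. The function names are hypothetical. Unlike the call above, it covers only the node on which the jobscript runs, not all job nodes.

```shell
#!/bin/bash
# Hypothetical single-node alternative to freeavail.sh.
# MemAvailable in /proc/meminfo is given in kB; print it in MiB.
mem_available_mib() {
    awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' /proc/meminfo
}

# Append "timestamp MiB" to FILE every INTERVAL seconds, COUNT times.
log_mem_periodic() {
    local interval=$1 count=$2 file=$3 i
    for (( i = 0; i < count; i++ )); do
        printf '%s %s\n' "$(date +%Y-%m-%dT%H:%M:%S)" "$(mem_available_mib)" >> "$file"
        if (( i + 1 < count )); then sleep "$interval"; fi
    done
}
```

Usage, in analogy to the freeavail.sh call: `(sleep 10; log_mem_periodic 60 "$(( $(qwtime -r) / 60 ))" "$PWD/mem_${PBS_JOBID%%.*}.log") &`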

ISV codes

- see also ISV_Usage

OpenFOAM®

The job script is submitted via qsub and contains all information for the complete job. An example for pimpleFoam is given below; the job script is named job.pbs:

#!/bin/bash

#PBS -N myjobname

#PBS -l select=3:node_type=rome:mpiprocs=128

#PBS -l walltime=00:20:00

set -e

# Change to the directory that the job was submitted from

cd "$PBS_O_WORKDIR"

module load openfoam/2312-int32

# rome-scripts for easy omplace usage:

module load rome-scripts

timestamp=$(date +"%Y%m%d-%H%M")

### using 3 nodes with 96 of the 128 cores per node, with pinning and correct displacement, resulting in 288 MPI processes

mpirun -np 288 omplace -c `distribute_by_fraction.py 96 128` pimpleFoam -parallel > "${timestamp}_log_pimpleFoam.txt" 2>&1

# using all cores of the 3 nodes (384 MPI processes), one would use:

# mpirun -np 384 pimpleFoam -parallel > "${timestamp}_log_pimpleFoam.txt" 2>&1
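For a parallel OpenFOAM run, the case has to be decomposed (e.g. with decomposePar) into as many subdomains as MPI processes. A mismatch between -np and numberOfSubdomains in system/decomposeParDict is a common cause of failed jobs. The following is a sketch of a pre-flight check that could be placed before mpirun. The function name check_subdomains is hypothetical. It assumes the usual one-line entry `numberOfSubdomains N;`.

```shell
#!/bin/bash
# Hypothetical pre-flight check: compare numberOfSubdomains in the
# decomposeParDict with the number of MPI processes passed to mpirun.
check_subdomains() {
    local np=$1 dict=${2:-system/decomposeParDict} n
    # extract N from a line like "numberOfSubdomains 288;" (first match only)
    n=$(sed -n 's/^[[:space:]]*numberOfSubdomains[[:space:]]\{1,\}\([0-9]\{1,\}\)[[:space:]]*;.*/\1/p' "$dict" | head -n 1)
    if [ -z "$n" ]; then
        echo "numberOfSubdomains not found in ${dict}" >&2
        return 1
    fi
    if [ "$n" -ne "$np" ]; then
        echo "numberOfSubdomains (${n}) differs from -np (${np})" >&2
        return 1
    fi
}
```

Usage in job.pbs, before mpirun: `check_subdomains 288 || exit 1`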

Several versions are available and can be listed via the following command:

module avail foam