- Information contained in the HLRS Wiki is not legally binding and HLRS is not responsible for any damages that might result from its use -

CRAY XC40 Hardware and Architecture: Difference between revisions



Revision as of 14:45, 1 October 2014

Installation step 2a (hornet)

Summary Phase 1 Step 2

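| Cray Cascade XC40 Supercomputer | Step 2 |
|---|---|
| Performance: peak | 3.79 Pflops |
| Performance: HPL | 2.76 Pflops |
| Cray Cascade Cabinets | 21 |
| Number of Compute Nodes | 3944 (dual socket) |
| Compute Processors: total number of CPUs | 3944 × 2 = 7888 Intel Haswell E5-2680v3, 2.5 GHz, 12 cores, 2 HT/core |
| Compute Processors: total number of cores | 7888 × 12 = 94656 |
| Compute Memory on Scalar Processors: memory type | DDR4 |
| Compute Memory on Scalar Processors: memory per compute node | 128 GB |
| Compute Memory on Scalar Processors: total scalar compute memory | 504832 GB ≈ 505 TB |
| Interconnect | Cray Aries |
| User Storage: Lustre workspace capacity | 5.4 PB |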

For detailed information see XC40-Intro
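The totals in the Step 2 summary can be cross-checked with a few lines of arithmetic. The sketch below uses only figures from this page, plus one assumption that the page does not state: 16 double-precision floating-point operations per core per cycle, which is the usual figure for Haswell with two 4-wide AVX2 FMA units. The function and constant names are illustrative only.

```python
# Figures taken from the Step 2 summary on this page.
NODES = 3944
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 12
CLOCK_GHZ = 2.5
MEM_PER_NODE_GB = 128
HPL_PFLOPS = 2.76

# Assumption (not stated on the page): double-precision operations
# per core per cycle for Haswell (2 FMA units x 4 doubles x 2 ops).
FLOPS_PER_CYCLE = 16


def summarize():
    """Derive the aggregate figures of the Step 2 system from per-node data."""
    cpus = NODES * SOCKETS_PER_NODE
    cores = cpus * CORES_PER_SOCKET
    memory_gb = NODES * MEM_PER_NODE_GB
    # cores * GHz * flops/cycle gives Gflops; divide by 1e6 for Pflops.
    peak_pflops = cores * CLOCK_GHZ * FLOPS_PER_CYCLE / 1e6
    return {
        "cpus": cpus,
        "cores": cores,
        "memory_gb": memory_gb,
        "peak_pflops": peak_pflops,
        "hpl_efficiency": HPL_PFLOPS / peak_pflops,
    }


if __name__ == "__main__":
    s = summarize()
    print(f"CPUs:           {s['cpus']}")
    print(f"Cores:          {s['cores']}")
    print(f"Memory:         {s['memory_gb']} GB")
    print(f"Peak:           {s['peak_pflops']:.2f} Pflops")
    print(f"HPL efficiency: {s['hpl_efficiency']:.1%}")
```

Under that assumption the computed peak rounds to the 3.79 Pflops quoted in the summary, and the HPL result corresponds to roughly 73% of peak.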

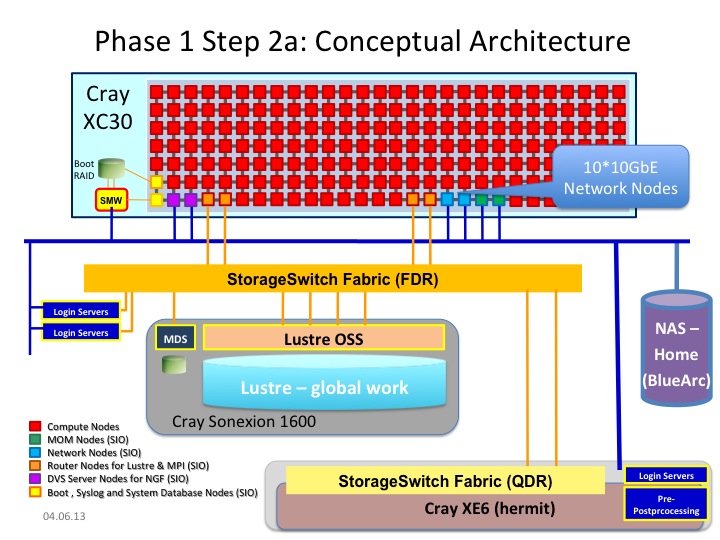

Summary Phase 1 Step 2a

| Cray Cascade XC30 Supercomputer | Step 2a |
|---|---|
| Cray Cascade Cabinets | 1 |
| Number of Compute Nodes | 164 |
| Number of Compute Processors | 328 Intel SandyBridge, 2.6 GHz, 8 cores |
| Compute Memory on Scalar Processors | DDR3 1600 MHz |
| I/O Nodes | 14 |
| Interconnect | Cray Aries |
| External Login Servers | 2 |
| Pre- and Post-Processing Servers | - |
| User Storage | (330 TB) |
| Cray Linux Environment (CLE) | Yes |
| PGI Compiling Suite (FORTRAN, C, C++) including Accelerator | 25 users (shared with Step 1) |
| Cray Developer Toolkit | Unlimited users |
| Cray Programming Environment | Unlimited users |
| Allinea DDT Debugger | 2048 processes (shared with Step 1) |
| Lustre Parallel Filesystem | Licensed on all sockets |
| Intel Composer XE | 10 seats |

Architecture

- System Management Workstation (SMW)
  - the system administrator's console for managing a Cray system: monitoring, installing and upgrading software, controlling the hardware, and starting and stopping the XC30 system.
- service nodes are classified into:
  - login nodes, through which users access the system
  - boot nodes, which provide the OS for all other nodes, licenses, ...
  - network nodes, which provide e.g. external network connections for the compute nodes
  - Cray Data Virtualization Service (DVS): an I/O forwarding service that can parallelize the I/O transactions of an underlying POSIX-compliant file system.
  - sdb node for services like ALPS, Torque, Moab, Slurm, Cray management services, ...
  - I/O nodes, e.g. for Lustre
  - MOM nodes, which place user jobs from the batch system into execution
- compute nodes
  - are only available to users through the batch system and the Application Level Placement Scheduler (ALPS), see running applications.
  - Each compute node is installed with 64 GB of memory and is attached to the fast interconnect (Cray Aries).
  - Details about the interconnect of the Cray XC series network
- In future, the StorageSwitch Fabric of step 2a and step 1 will be connected, so that the Lustre workspace filesystems can be used on the hardware (login servers and pre-processing servers) of both step 1 and step 2a.
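Because compute nodes are reached only through the batch system and ALPS, a job script running on a MOM node typically starts the application with the ALPS launcher `aprun`. The following is a minimal sketch of how the rank counts for such a call could be derived. The helper `build_aprun_command` and the executable name `./my_app` are hypothetical and not part of any HLRS or Cray tool; only the `aprun` options `-n` (total number of processing elements) and `-N` (processing elements per node) are used. The value of 24 cores per node is an assumption based on the dual-socket, 12-core processors of Step 2.

```python
def build_aprun_command(executable, nodes, cores_per_node, ranks_per_node=None):
    """Return an aprun argument list that spreads ranks evenly over the nodes.

    Hypothetical helper for illustration; it only assembles the command
    line and does not call ALPS itself.
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    if ranks_per_node is None:
        # Default: one rank per physical core.
        ranks_per_node = cores_per_node
    if not 1 <= ranks_per_node <= cores_per_node:
        raise ValueError("ranks_per_node must be between 1 and cores_per_node")
    total_ranks = nodes * ranks_per_node
    return ["aprun", "-n", str(total_ranks), "-N", str(ranks_per_node), executable]


if __name__ == "__main__":
    # Two nodes, assuming 2 sockets x 12 cores = 24 cores per node.
    print(" ".join(build_aprun_command("./my_app", nodes=2, cores_per_node=24)))
```

The command that the batch system actually expects, and the resource request that must accompany it, are described in the site's documentation on running applications.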